Original Text: What do I think about biometric proof of personhood?

Author: Vitalik

Translation: Qianwen, bayemon.eth, ChainCatcher

Special thanks to the Worldcoin team, Proof of Humanity community, and Andrew Miller for the discussion.

One of the trickier, but potentially most valuable, gadgets that the Ethereum community has been trying to build is a decentralized proof-of-personhood solution. Proof of personhood, also known as the unique-human problem, is a limited form of real-world identity that asserts that a given registered account is controlled by a real person (and a different real person from every other registered account), ideally without revealing which real person it is.

There have been several attempts to solve this problem, such as Proof of Humanity, BrightID, Idena, and Circles. Some of them come with their own applications (usually a UBI token), and some are used in Gitcoin Grants to verify which accounts are eligible for quadratic funding matches. Zero-knowledge technologies like Sismo add privacy to many of these solutions. Recently, we have seen the rise of a much larger and more ambitious proof-of-personhood project: Worldcoin.

Worldcoin was co-founded by Sam Altman, who is best known as the CEO of OpenAI. The idea behind the project is simple: artificial intelligence will create a lot of wealth for humanity, but it may also cost many people their jobs, and it will become almost impossible to tell who is a human and who is a bot. We therefore need to close this gap by:

- Creating a very good proof-of-personhood system so that humans can prove that they are indeed humans;

- Giving everyone a UBI.

What makes Worldcoin unique is its reliance on highly sophisticated biometrics, using a piece of specialized hardware called the Orb to scan each user’s iris. The goal is to produce a large number of Orbs and distribute them widely around the world, placing them in public places so that anyone can easily obtain an ID. It is worth noting that Worldcoin also promises to become more decentralized over time. Initially, this means decentralization in a technical sense: being an L2 on Ethereum built on the Optimism stack, and using ZK-SNARKs and other cryptographic techniques to protect user privacy. Later, it will also include decentralizing the governance of the system itself.

Worldcoin has been criticized for privacy and security concerns around the Orb, design issues with its token, and some ethical choices made by the company. Some of these criticisms are highly specific, focusing on decisions that could easily have been made differently, and that the Worldcoin project itself may be willing to change. Others raise a more fundamental question: whether biometrics (not only Worldcoin’s eye scanning, but also simpler approaches such as face video uploads and the verification games used in Idena) are a good idea at all. Still others criticize proof of personhood in general, citing risks that include unavoidable privacy leaks, further erosion of people’s ability to browse the internet anonymously, coercion by authoritarian governments, and the potential impossibility of being secure and decentralized at the same time.

This article will discuss these issues and help you decide whether it is a good idea to bow down and scan your eyes (or face, voice, etc.) before this spherical god, and whether the alternatives are any better: using proof of personhood based on social graphs, or abandoning proof of personhood altogether.

What is proof of personhood and why is it important?

The simplest definition is: it creates a list of public keys, and the system guarantees that each public key is controlled by a unique human. In other words, if you are a human, you can put one key on the list, but you cannot put two keys on the list, and if you are a bot, you cannot put any key on the list.

Proof of personhood is valuable because it solves many of the anti-spam and anti-concentration-of-power problems that people face, in a way that avoids dependence on centralized authorities and reveals as little information as possible. If proof of personhood is not solved, decentralized governance (including “micro-governance”, such as votes on social media posts) becomes much easier to capture by very wealthy actors, including hostile governments. Many services would only be able to prevent denial-of-service attacks by setting a price for access, and sometimes a price high enough to deter attackers is also too high for many lower-income legitimate users.

Many major applications in the world today deal with this problem by using government-backed identity systems (such as credit cards and passports). This solves the problem, but it makes large and perhaps unacceptable sacrifices in privacy, and it can be trivially attacked by governments themselves.

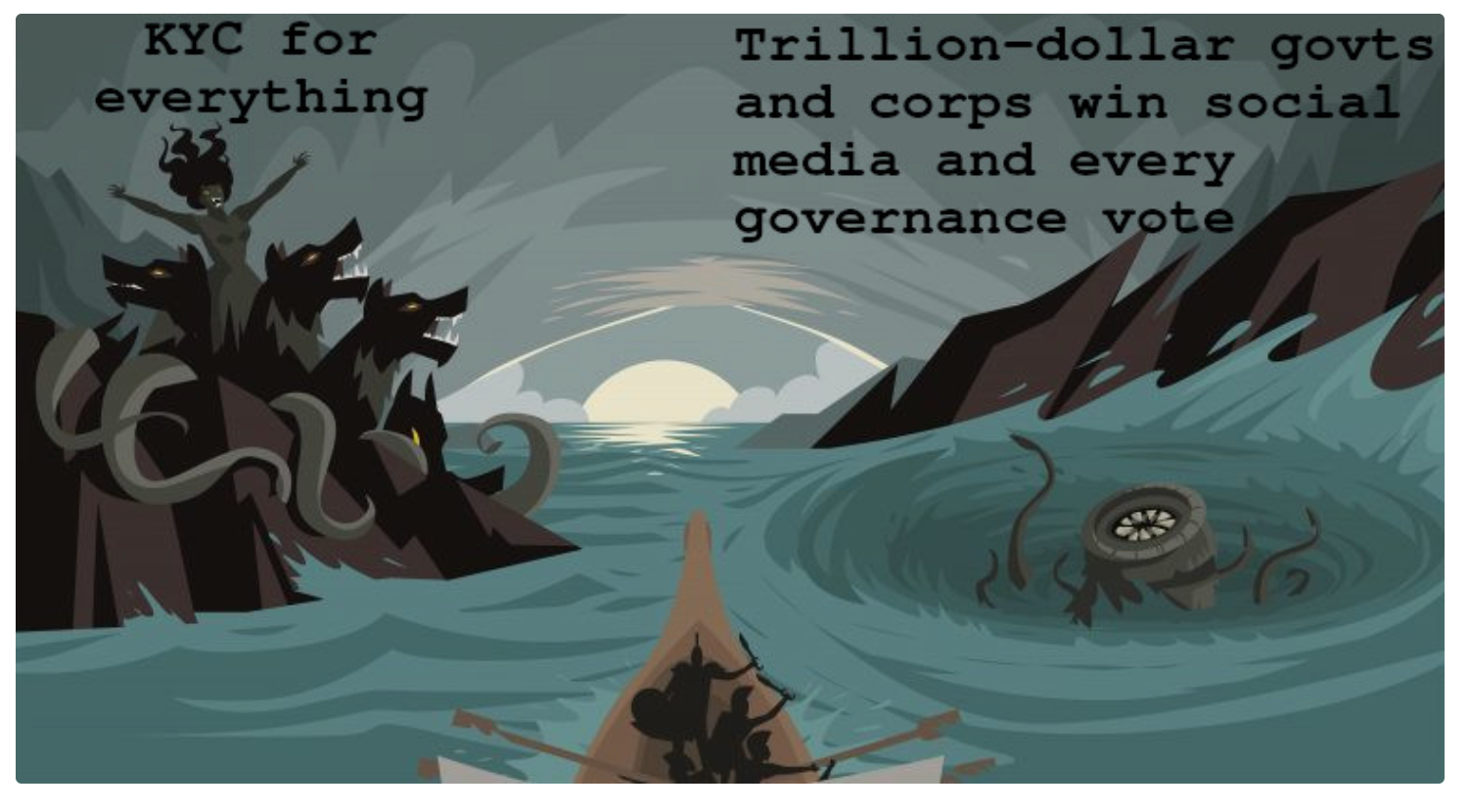

How many proof-of-personhood proponents see the two-sided risk we are facing.

In many proof-of-personhood projects (not only Worldcoin, but also Proof of Humanity, Circles, and others), the flagship application is a built-in “N-per-person token” (sometimes called a UBI token). Each user registered in the system receives a fixed number of tokens every day (or every hour, or every week). But there are also many other applications:

- Airdrops of tokens

- Token or NFT sales, providing more favorable conditions for less wealthy users

- Voting in DAOs

- A way to seed graph-based reputation systems

- Quadratic voting (as well as quadratic funding and attention payments)

- Protection against bot and sybil attacks on social media

- An alternative to CAPTCHAs for preventing DoS attacks

In many of these cases, the common thread is a desire to build mechanisms that are open and democratic, avoiding both centralized control by the project’s operators and domination by its wealthiest users. The latter is particularly important in decentralized governance. In many of these cases, existing solutions rely on a combination of the following:

- Highly opaque AI algorithms, which leave plenty of room to discriminate, undetected, against users that the operators simply dislike;

- Centralized identity verification, also known as KYC.

An effective proof-of-personhood solution would be a better alternative, achieving the security properties these applications need without the drawbacks of existing centralized approaches.

What are some early attempts at proof of personhood?

Proof of personhood primarily takes two forms: social-graph-based and biometric.

Social-graph-based proof of personhood relies on some form of vouching: if Alice, Bob, Charlie, and David are all verified humans and they all say that Emily is a verified human, then Emily is probably a verified human as well. Vouching is typically reinforced with incentives: if Alice claims Emily is human but it turns out she is not, both Alice and Emily may be penalized. Biometric proof of personhood involves verifying some physical or behavioral trait of Emily that distinguishes humans from bots (and individual humans from each other). Most projects combine the two techniques.

The four systems I mentioned at the beginning of this article work roughly as follows:

- Proof of Humanity: Upload a video of yourself and provide a deposit. To be approved, you need an existing user to vouch for you, and a certain amount of time must pass during which others can challenge you. If there is a challenge, Kleros, a decentralized court, decides whether your video is genuine. If it is judged fake, you lose your deposit and the challenger is rewarded.
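The vouching-with-penalties idea can be sketched in a few lines of Python. This is a minimal illustration rather than any project's actual protocol: the class name `VouchRegistry`, the threshold of four vouchers, and the penalty size are all assumptions made up for the example.

```python
from dataclasses import dataclass, field

@dataclass
class VouchRegistry:
    threshold: int = 4   # hypothetical number of vouches needed for verification
    penalty: int = 10    # hypothetical stake lost when a vouch turns out wrong
    verified: set = field(default_factory=set)
    vouches: dict = field(default_factory=dict)   # candidate -> set of vouchers
    balance: dict = field(default_factory=dict)   # account -> stake balance

    def vouch(self, voucher: str, candidate: str) -> None:
        """A verified human vouches that the candidate is human."""
        if voucher not in self.verified:
            raise ValueError("only verified humans can vouch")
        self.vouches.setdefault(candidate, set()).add(voucher)
        if len(self.vouches[candidate]) >= self.threshold:
            self.verified.add(candidate)

    def report_fake(self, candidate: str) -> None:
        """The candidate was shown to be fake: penalize them and their vouchers."""
        self.verified.discard(candidate)
        for account in self.vouches.pop(candidate, set()) | {candidate}:
            self.balance[account] = self.balance.get(account, 0) - self.penalty

registry = VouchRegistry(verified={"alice", "bob", "charlie", "david"})
for voucher in ["alice", "bob", "charlie", "david"]:
    registry.vouch(voucher, "emily")
print("emily" in registry.verified)
```

If Emily is later shown to be fake, `registry.report_fake("emily")` removes her and deducts the penalty from Alice, Bob, Charlie, David, and Emily alike.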

- BrightID: Participate in video call verification parties with other users, where everyone verifies each other. More advanced verification can be achieved through Bitu, where if enough Bitu-verified users vouch for you, you can pass the verification.

- Idena: Play a captcha game at a specific point in time (to prevent multiple participation); part of the captcha game involves creating and verifying captchas, and then using those captchas to verify others.

- Circles: Have existing Circles users endorse you. What sets Circles apart is that it doesn’t aim to create a globally verifiable ID; instead, it creates a trust graph where the trustworthiness of someone can only be verified based on their position in the graph.

How does Worldcoin work?

Every Worldcoin user installs an app on their phone that generates a private and public key, much like an Ethereum wallet. They then visit an Orb in person. The user stares into the Orb’s camera while showing it a QR code generated by the Worldcoin app, which contains their public key. The Orb scans the user’s eyes and uses sophisticated hardware scanning and machine-learned classifiers to verify two things:

1) The user is a real person

2) The user’s iris does not match the iris of any other user who has previously used the system

If both checks pass, the Orb signs a message approving a specialized hash of the user’s iris scan. The hash is uploaded to a database (currently a centralized server), which is intended to be replaced by a decentralized on-chain system once the hashing mechanism is proven to work. The system does not store full iris scans, only the hashes, which are used to check uniqueness. From that point on, the user has a World ID.

World ID holders can prove that they are unique humans by generating a ZK-SNARK, which proves that they hold the private key corresponding to one of the public keys in the database without revealing which key they hold. Therefore, even if someone rescans your iris, they cannot see any of the actions you have taken.

What are the main issues with Worldcoin’s construction?

Four major risks:

- Privacy. The registry of iris scans may leak information. At the very least, if someone else scans your iris, they can check it against the database to determine whether you have a World ID. Iris scans may also reveal more information than that.

- Accessibility. World IDs will not be reliably accessible to everyone unless there are enough Orbs.

- Centralization. The Orb is a hardware device, and we cannot verify that it was built correctly or that it has no backdoor. Therefore, even if the software layer is perfect and fully decentralized, the Worldcoin Foundation still has the ability to insert a backdoor into the system, allowing it to create arbitrarily many fake human identities.

- Security. Users’ phones may be hacked, and users may be coerced into scanning their irises while presenting someone else’s public key. It may also be possible to 3D-print fake people that can pass the iris scan and obtain World IDs.

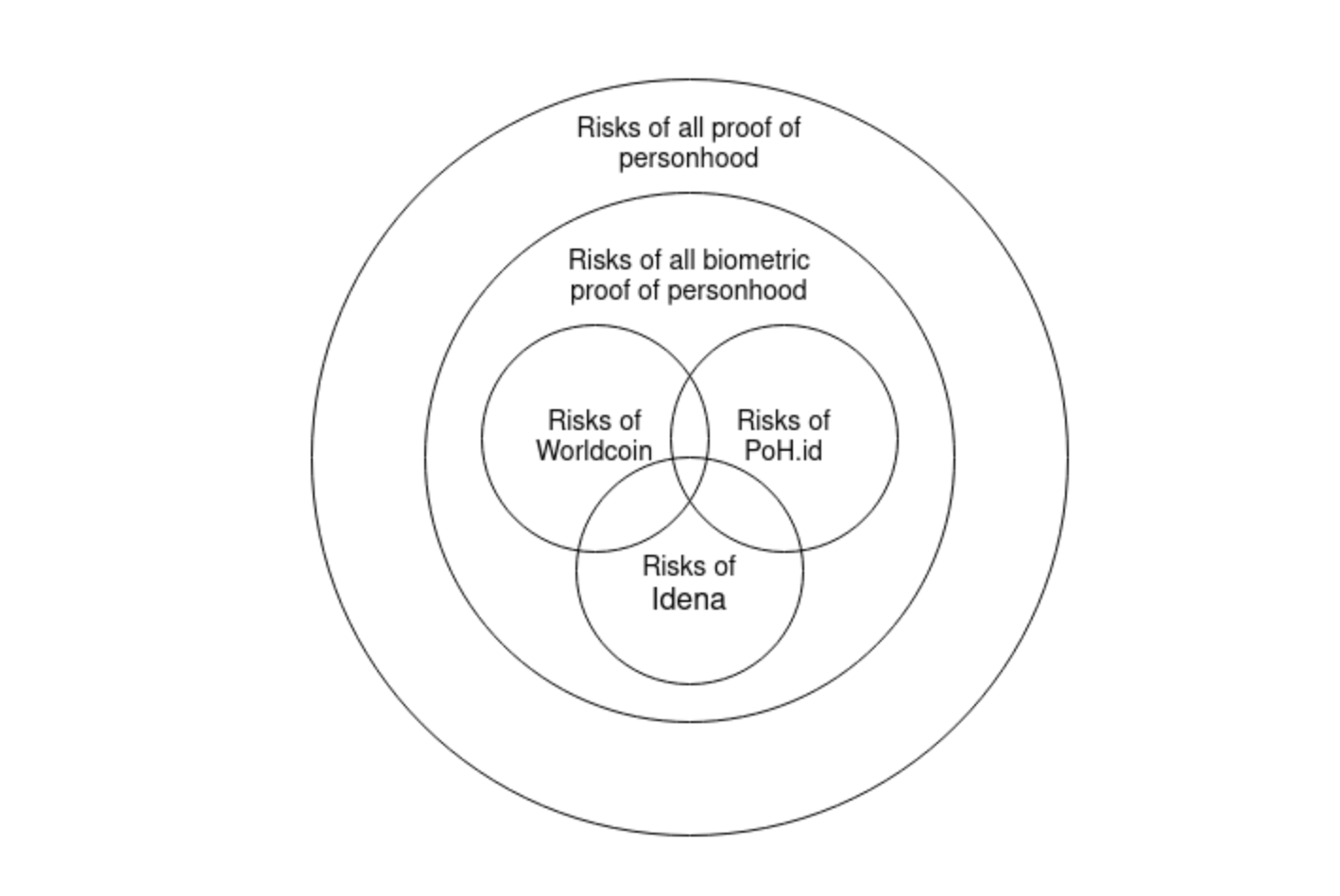

It is important to distinguish between (i) problems specific to the choices Worldcoin has made, (ii) problems inherent in any biometric proof of personhood, and (iii) problems inherent in any proof of personhood in general.

For example, registering with Proof of Humanity means publishing your face on the internet. Joining a BrightID verification party does not quite do that, but it still exposes your identity to many people. Joining Circles publicly exposes your social graph.

In comparison, Worldcoin is much better at protecting privacy. On the other hand, Worldcoin depends on specialized hardware, which raises the problem of trusting the Orb manufacturers to have built the Orbs correctly, a problem that has no parallel in Proof of Humanity, BrightID, or Circles. It is even conceivable that in the future, someone other than Worldcoin will create a different specialized hardware solution that makes different trade-offs.

How does a biometric proof-of-personhood scheme address privacy concerns?

The most obvious, and potentially the largest, privacy leak of any proof-of-personhood system is linking every action a person takes to their real-world identity. This data leak is severe enough to be considered unacceptable, but fortunately, zero-knowledge proof technology solves this problem quite easily.

Instead of signing directly with the private key whose public key is stored in the database, a user can produce a ZK-SNARK proving that they hold a private key whose corresponding public key is somewhere in the database, without revealing which key it is. This can be done with tools like Sismo (which has a Proof of Humanity-specific implementation), and Worldcoin has its own built-in implementation. It is worth giving crypto-native proof-of-personhood projects credit here: they actually care about taking this basic step to provide anonymity, which is something almost all centralized identity solutions fail to do.
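To make the shape of that proof more concrete, here is a small Python sketch of the statement such a ZK-SNARK would prove. It contains no zero-knowledge machinery: `statement_holds` simply evaluates the relation in the clear, which a real prover would instead demonstrate without revealing `secret`. The hash-based `public_key` stand-in and the per-application `nullifier` tag are assumptions added for illustration and are not taken from the text above.

```python
import hashlib

def h(*parts: bytes) -> bytes:
    """Hash byte strings with length prefixes so that inputs cannot be confused."""
    digest = hashlib.sha256()
    for part in parts:
        digest.update(len(part).to_bytes(4, "big"))
        digest.update(part)
    return digest.digest()

def public_key(secret: bytes) -> bytes:
    # Assumption: a hash commitment stands in for real key derivation.
    return h(b"pk", secret)

def nullifier(secret: bytes, app_id: bytes) -> bytes:
    # Hypothetical per-application tag: the same person in the same app always
    # produces the same tag, but tags from different apps cannot be linked.
    return h(b"nullifier", secret, app_id)

def statement_holds(secret: bytes, registry: set, app_id: bytes,
                    claimed_nullifier: bytes) -> bool:
    """The relation a ZK-SNARK would prove while keeping `secret` hidden."""
    return (public_key(secret) in registry
            and nullifier(secret, app_id) == claimed_nullifier)

registry = {public_key(b"alice-secret"), public_key(b"bob-secret")}
forum_tag = nullifier(b"alice-secret", b"forum")
vote_tag = nullifier(b"alice-secret", b"vote")
print(statement_holds(b"alice-secret", registry, b"forum", forum_tag))
print(forum_tag != vote_tag)
```

Under these assumptions, an application would see only the tag and a proof, so it could enforce "one action per human" without learning which registry entry belongs to the user.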

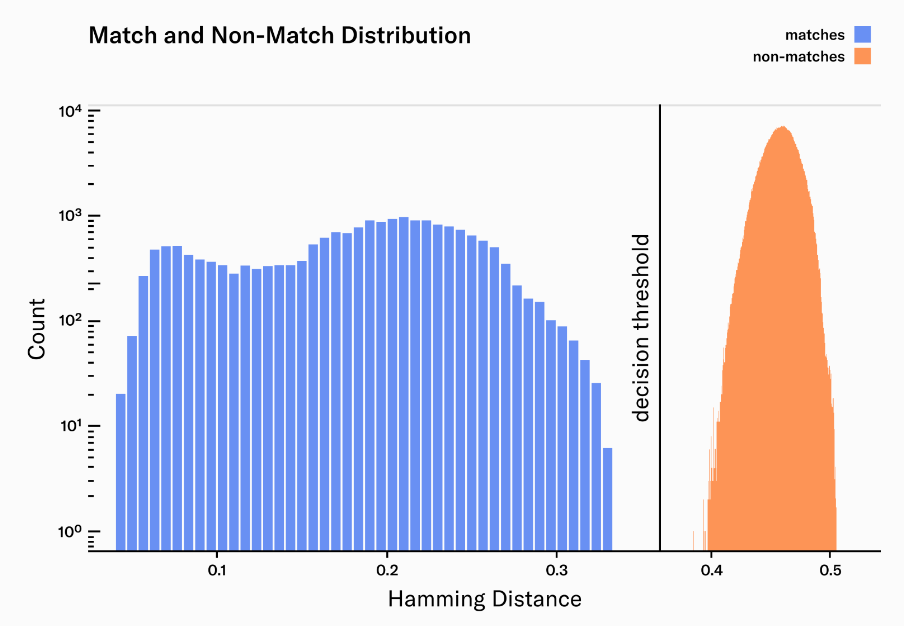

A more subtle but still important privacy concern is the mere existence of a public registry of biometric scans. In Proof of Humanity, this means a lot of data: there is a video of every participant, so anyone in the world who cares to look can see exactly who the participants are. In Worldcoin, the leaked data is much more limited: the Orb computes locally, and publishes, only a hash of each person’s iris scan. This hash is not a regular hash like SHA256; it is a specialized algorithm based on machine-learned Gabor filters, designed to cope with the inexactness inherent in any biometric scan and to ensure that successive scans of the same person’s iris produce similar outputs.

Blue: the percentage of bits that differ between two iris scan results of the same person. Orange: the percentage of bits that differ between two iris scan results of different people.

These iris hash values only leak a small amount of data. If an adversary can forcibly (or secretly) scan your iris, they can calculate the hash value themselves and compare it with the iris hash value database to determine if you have participated in the system. This check of whether someone is registered is necessary for the system itself, to prevent people from registering multiple times, but it is always susceptible to abuse.
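The comparison described here amounts to measuring what fraction of bits differ between two iris hashes and treating a small difference as a match. The Python sketch below illustrates that check on toy 16-bit codes; the function names and the 0.3 threshold are assumptions chosen for the example, not Worldcoin's actual parameters.

```python
from typing import Optional, Sequence

def hamming_fraction(code_a: int, code_b: int, nbits: int) -> float:
    """Fraction of the lowest `nbits` bits that differ between two codes."""
    mask = (1 << nbits) - 1
    return bin((code_a ^ code_b) & mask).count("1") / nbits

def find_match(scan: int, database: Sequence[int], nbits: int,
               threshold: float = 0.3) -> Optional[int]:
    """Return the index of the first stored code within `threshold`, else None."""
    for index, stored in enumerate(database):
        if hamming_fraction(scan, stored, nbits) < threshold:
            return index
    return None

stored = 0b1111000011110000
rescan = stored ^ 0b11          # same person, two noisy bits
stranger = stored ^ 0xFFFF      # every bit differs

print(hamming_fraction(stored, rescan, 16))
print(find_match(rescan, [stored], 16))
print(find_match(stranger, [stored], 16))
```

The same function serves both purposes mentioned above: the system uses it to reject duplicate registrations, and an adversary with a scan of your iris could use it to learn whether you are registered.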

In addition, iris hash values have the potential to leak a certain amount of medical data (including gender, race, and possibly medical conditions), but this leakage is much smaller than what can be captured by almost all other large-scale data collection systems currently in use (for example, even street cameras).

Overall, in my opinion, storing iris hash values is sufficient to protect privacy. If others disagree with this judgment and decide to design a system with stronger privacy, there are two methods to achieve this:

1) If the iris hashing algorithm can be improved so that the difference between two scans of the same person is much smaller (e.g., reliably under 10% bit flips), then instead of storing complete iris hashes, the system can store a smaller number of error-correction bits for each iris hash (see: fuzzy extractor). If the difference between two scans is below 10%, the number of bits that need to be published would be at least 5 times smaller.

2) If we want to go further, we can store the iris hash database inside a multi-party computation (MPC) system that can only be accessed by Orbs (with rate limits), making the data completely inaccessible. The cost is significant protocol complexity, and social complexity in governing the set of MPC participants. The benefit is that users would not be able to prove a link between two different World IDs that they held at different times, even if they wanted to.

Unfortunately, these techniques are not applicable to Proof of Humanity, because Proof of Humanity requires the full video of each participant to be publicly available, so that it can be challenged if there are signs that it is fake (including AI-generated fakes) and investigated in more detail.
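The first option can be illustrated with a toy "secure sketch" built from the Hamming(7,4) code: instead of storing the iris bits, the system stores a 3-bit syndrome for every 7 bits, which is enough to correct one flipped bit per block in a later scan. This is only a sketch under simplifying assumptions (the input length is a multiple of 7 and at most one bit flips per block); a real system would need a much stronger code, and the function names are made up for the example.

```python
from typing import List

BLOCK = 7  # Hamming(7,4): 7-bit blocks, 3-bit syndromes, corrects 1 error per block

def syndrome(block: List[int]) -> int:
    """XOR of the 1-based positions of all set bits (a value from 0 to 7)."""
    value = 0
    for position, bit in enumerate(block, start=1):
        if bit:
            value ^= position
    return value

def make_sketch(bits: List[int]) -> List[int]:
    """Helper data to store: one 3-bit syndrome per 7-bit block of the scan."""
    return [syndrome(bits[i:i + BLOCK]) for i in range(0, len(bits), BLOCK)]

def recover(noisy_bits: List[int], helper: List[int]) -> List[int]:
    """Correct up to one flipped bit per block using the stored syndromes."""
    corrected = list(noisy_bits)
    for block_index, stored in enumerate(helper):
        start = block_index * BLOCK
        # The syndrome difference equals the 1-based position of the flipped bit.
        difference = syndrome(corrected[start:start + BLOCK]) ^ stored
        if difference:
            corrected[start + difference - 1] ^= 1
    return corrected

original = [1, 0, 1, 1, 0, 0, 1,  0, 1, 1, 0, 1, 0, 0]
helper = make_sketch(original)          # 2 syndromes, 3 bits each
rescan = list(original)
rescan[2] ^= 1                          # one flipped bit in the first block
rescan[12] ^= 1                         # one flipped bit in the second block
print(recover(rescan, helper) == original)
```

Here 6 bits of helper data are stored for a 14-bit scan, and the original bits can be reconstructed only by someone who already holds a scan close to the original.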

In general, although staring into an Orb and letting it scan deep into your eyes has a dystopian feel, specialized hardware systems do seem able to protect privacy quite well. The flip side is that specialized hardware introduces much greater centralization concerns. We therefore seem to be stuck in a dilemma: one set of values has to be traded off against another.

What are the accessibility issues with biometric proof-of-personhood systems?

Specialized hardware raises accessibility concerns because specialized hardware is, by nature, not very accessible. Currently, about 51% to 64% of people in sub-Saharan Africa have smartphones, and this is projected to increase to 87% by 2030. However, while there are billions of smartphones in the world, there are only a few hundred Orbs. Even with much larger-scale distributed manufacturing, it would be hard to reach a world where there is an Orb within five kilometers of everyone.

It is worth noting that many other forms of proof of personhood have even more serious accessibility problems. It is very difficult to join a social-graph-based proof-of-personhood system unless you already know someone in the social graph. This makes it easy for such systems to remain confined to a single community in a single country.

Even centralized identity systems have learned this lesson: India’s Aadhaar ID system is based on biometrics, because that was the only way to onboard its enormous population quickly while avoiding large-scale fraud from duplicate and fake accounts (resulting in large cost savings). Of course, the Aadhaar system as a whole is much weaker on privacy than anything proposed at scale within the cryptocurrency community.

From an accessibility perspective, the best-performing systems are actually ones like Proof of Humanity, where all you need to register is a smartphone.

What are the centralization issues with biometric proof-of-personhood systems?

There are three kinds:

1. Centralization risks in the system’s top-level governance (especially the mechanism that makes final decisions when different participants disagree on subjective judgments).

2. Centralization risks unique to systems that use specialized hardware.

3. Centralization risks that arise if proprietary algorithms are used to determine who is a genuine participant.

Any proof-of-personhood system must contend with the first risk, except perhaps one in which the set of accepted IDs is completely subjective. If a system uses incentives denominated in external assets (such as ETH, USDC, or DAI), it cannot be fully subjective, so governance risk becomes unavoidable.

The second risk is much greater for Worldcoin than for Proof of Humanity (or BrightID), because Worldcoin depends on specialized hardware and the other systems do not.

The third risk is especially present in logically centralized systems, where a single system performs the verification, unless all of the algorithms are open source and we have assurance that the system is actually running the code it claims to run. For systems that rely purely on users verifying other users (such as Proof of Humanity), this is not a risk.

How does Worldcoin address the problem of hardware centralization?

Currently, a Worldcoin-affiliated entity called Tools for Humanity is the only organization manufacturing Orbs. However, most of the Orb’s source code is public: the hardware specifications are available in a GitHub repository, and other parts of the source code are expected to be released soon. The license is another “shared source, but not technically open source until four years from now” license, similar to the Uniswap BSL, except that in addition to preventing forks it also prevents what they consider unethical behavior: they specifically list mass surveillance and three international civil rights declarations.

The team’s stated goal is to allow and encourage other organizations to build Orbs, and to transition over time from Orbs built by Tools for Humanity to Orbs built by organizations that are approved and managed by some kind of DAO recognized by the system.

This design has two problems:

1) It may fail to truly decentralize, because of a common pitfall of federated protocols: over time, one manufacturer may come to dominate in practice, and the system re-centralizes. Governance could limit how many valid Orbs each manufacturer may produce, but this would have to be managed carefully, and it puts a lot of pressure on governance to be both decentralized and able to monitor the ecosystem and respond to threats effectively. This is a much harder task than that of a fairly static DAO that only handles top-level dispute resolution.

2) It is nearly impossible to make such a distributed manufacturing mechanism secure. There are two risks here:

- Fragility against malicious Orb manufacturers: if even one Orb manufacturer is malicious or gets hacked, it can generate an unlimited number of fake iris scan hashes and give them World IDs.

- Government restrictions on Orbs: governments that do not want their citizens to participate in the Worldcoin ecosystem can ban Orbs from their country. Furthermore, they could even force their citizens to have their irises scanned, allowing the government to take over their accounts, and citizens would have no way to respond.

To make the system more robust against malicious Orb manufacturers, the Worldcoin team proposes regular audits of Orbs, verifying that they are built correctly, that key hardware components are built to specification, and that they have not been tampered with after the fact. This is a challenging task: it is essentially something like the nuclear inspections carried out by the International Atomic Energy Agency (IAEA), but for Orbs. The hope is that even a very imperfect implementation of the auditing regime could greatly reduce the number of fake Orbs.

To limit the harm caused by any malicious Orb that does slip through, a second mitigation is needed: World IDs registered with different Orb manufacturers, and ideally with different Orbs, should be distinguishable from each other. It is fine if this information is private and stored only on the World ID holder’s device, but it does need to be provable on demand. This allows the ecosystem to respond to (inevitable) attacks by removing individual Orb manufacturers, or even individual Orbs, from the whitelist as needed. For example, if we find that the North Korean government is going around forcing people to scan their eyeballs, those Orbs and any accounts produced by them could be immediately and retroactively disabled.
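A minimal Python sketch of this second mitigation follows. It assumes, purely for illustration, that each credential carries the identifiers of the manufacturer and the Orb that issued it; the names `Credential` and `OrbWhitelist` are hypothetical and do not describe Worldcoin's actual data model.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Credential:
    holder_key: str     # the World ID holder's public key (placeholder string)
    manufacturer: str   # which manufacturer built the issuing Orb
    orb_id: str         # which individual Orb issued the credential

@dataclass
class OrbWhitelist:
    revoked_manufacturers: set = field(default_factory=set)
    revoked_orbs: set = field(default_factory=set)

    def revoke_orb(self, orb_id: str) -> None:
        self.revoked_orbs.add(orb_id)

    def revoke_manufacturer(self, manufacturer: str) -> None:
        self.revoked_manufacturers.add(manufacturer)

    def is_valid(self, credential: Credential) -> bool:
        """A credential is valid only while its Orb and manufacturer are trusted."""
        return (credential.manufacturer not in self.revoked_manufacturers
                and credential.orb_id not in self.revoked_orbs)

whitelist = OrbWhitelist()
honest = Credential("key-1", manufacturer="maker-a", orb_id="orb-17")
coerced = Credential("key-2", manufacturer="maker-a", orb_id="orb-99")

whitelist.revoke_orb("orb-99")   # retroactively disables everything orb-99 issued
print(whitelist.is_valid(honest), whitelist.is_valid(coerced))
```

Because validity is checked against the current whitelist rather than fixed at issuance, revoking an Orb disables the accounts it produced without touching anyone else's.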

Security issues commonly found in proof-of-personhood

In addition to the issues specific to Worldcoin, there are concerns that affect proof-of-personhood designs in general. The main ones I can think of are:

- 3D-printed fake people: Someone could use AI to generate photographs, or even 3D prints, of fake people that are convincing enough for the Orb software to accept. If even one group manages to do this, it can generate an unlimited number of identities.

- Selling IDs: Someone can provide another person’s public key instead of their own when registering, giving that other person control of the registered ID in exchange for money. This already seems to be happening, and in addition to selling, IDs may also be rented out.

- Phone hacking: If a person’s phone is hacked, the hacker can steal the key that controls their World ID.

- Government coercion to steal IDs: A government could force its citizens to get verified while showing a QR code owned by the government. In this way, a malicious government could obtain millions of IDs. In a biometric system, this could even be done covertly: the government could use disguised Orbs to extract a World ID from everyone who passes through passport control when entering the country.

The first problem is unique to biometric proof-of-personhood systems. The second and third are common to both biometric and non-biometric designs. The fourth is also common to both, although the techniques required are quite different in the two cases; in this section, I will focus on the issues in the biometric case.

These are all quite serious problems, some of which have been properly addressed in existing protocols, some of which can be eliminated through future improvements, and some of which seem to be fundamental limitations.

How can we deal with 3D-printed fake people?

For Worldcoin, this risk is much smaller than for systems like Proof of Humanity: an in-person scan can check many features of a person and is much harder to fake than a carefully fabricated video. Specialized hardware is inherently harder to fool than commodity hardware, which is in turn harder to fool than digital algorithms that verify images and videos sent remotely.

Will someone eventually be able to 3D-print something that can fool even specialized hardware? Probably. I expect that at some point the tension between openness and security will grow: open-source AI algorithms are inherently more vulnerable to adversarial machine learning. Black-box algorithms are better protected, but it is hard to be sure that a black-box algorithm was not trained to include a backdoor. Perhaps ZK-ML technology will eventually give us the best of both worlds, although at some point even further in the future, even the best AI algorithms are likely to be fooled by the best 3D-printed fake people.

How can we prevent the sale of IDs?

In the short term, it is difficult to prevent IDs from being sold, because most people in the world do not even know that proof-of-personhood protocols exist. If you tell them to hold up a QR code and have their eyes scanned for $30, they will do it. Once more people know what proof-of-personhood protocols are, a fairly simple mitigation becomes possible: allowing people who have a registered ID to re-register, which cancels their previous ID. This makes ID sales much less credible, because the person who sold you an ID can simply go and re-register, canceling the ID they just sold. However, for this to work, the protocol must be widely known and Orbs must be widely accessible, so that on-demand registration is practical.

This is one of the reasons why integrating a UBI coin into a proof-of-personhood system is valuable: a UBI coin provides an easily understood incentive for people to (i) learn about the protocol and sign up, and (ii) immediately re-register if they have registered on behalf of someone else. Re-registration also mitigates phone hacking.
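The re-registration mitigation can be sketched as a registry in which each person has at most one active key, and a new registration silently replaces the old one. In this Python illustration, `person_tag` is an idealized stand-in for whatever the system uses to recognize the same human again (for example, a matching iris hash); the class and method names are assumptions made for the example.

```python
from typing import Dict, Optional

class ReRegistrationRegistry:
    def __init__(self) -> None:
        self._active_key: Dict[str, str] = {}   # person_tag -> current public key

    def register(self, person_tag: str, public_key: str) -> Optional[str]:
        """Register or re-register; returns the key that was revoked, if any."""
        revoked = self._active_key.get(person_tag)
        self._active_key[person_tag] = public_key
        return revoked

    def is_active(self, public_key: str) -> bool:
        return public_key in self._active_key.values()

registry = ReRegistrationRegistry()
registry.register("person-A", "buyer-key")      # seller registers the buyer's key
print(registry.is_active("buyer-key"))          # the buyer now controls the ID
revoked = registry.register("person-A", "seller-key")   # seller later re-registers
print(revoked, registry.is_active("buyer-key"))
```

A buyer therefore has no guarantee that a purchased ID will stay valid, and a user whose phone was hacked can recover in the same way.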

Can we prevent coercion in biometric proof-of-personhood systems?

It depends on the type of coercion we are talking about. Possible forms of coercion include:

- Governments scanning people’s eyes (or faces, etc.) at border controls and other routine government checkpoints, using this to register their citizens (and frequently re-register them).

- Governments banning Orbs within the country to prevent people from re-registering independently.

- Individuals buying IDs and then threatening to harm the seller if they find that the ID has been invalidated by re-registration.

- Applications (possibly government-run) requiring people to sign in by signing directly with their public key, letting the application see the corresponding biometric scan, and hence the link between the user’s current ID and any future IDs they obtain by re-registering. A common fear is that this makes it too easy to create permanent records that follow a person for their entire life.

It seems quite hard to prevent these situations completely, especially for unsophisticated users. Users could leave their own country and (re-)register at an Orb in a safer country, but this is a difficult and costly process. In a truly hostile legal environment, seeking out an independent Orb seems too difficult and too risky.

A feasible approach is to make such abuse more cumbersome to carry out and easier to detect. Proof of Humanity’s requirement that users speak a specific phrase during registration is a good example: it may be enough to prevent covert scanning, forcing coercion to be much more blatant, and the registration phrase could even include a statement confirming that the registrant knows they have the right to re-register independently and may receive UBI coin or other rewards. If coercion is detected, the devices used to perform coercive registrations en masse could have their access revoked. To prevent applications from linking people’s current and previous IDs and attempting to leave “permanent records”, the default proof-of-personhood app could lock the user’s key in trusted hardware, preventing any application from using the key directly without the anonymizing ZK-SNARK layer in between. If a government or application developer wanted to get around this, they would need to mandate the use of their own custom app.

With a combination of these techniques and active vigilance, it seems possible to lock out regimes that are truly hostile and to keep honest those that are merely mediocre (as is the case in much of the world). This could be done either by a project like Worldcoin or Proof of Humanity maintaining its own bureaucracy for the task, or by revealing more information about how an ID was registered (for example, in Worldcoin, which Orb it came from) and leaving the classification task to the community.

How can we prevent ID rental (such as vote selling)?

Re-registration does not prevent ID rental. In some applications this is not a problem: the value of renting out the right to collect a given day’s UBI coin is just the value of that day’s UBI coin. However, in applications such as community voting, vote selling is a major problem.

Systems like MACI can prevent you from credibly selling your vote, by allowing you to cast another vote later that invalidates your previous one, in such a way that no one can tell whether or not you actually cast such a vote. However, this does not help if the briber controls the key you obtained at registration time.

I believe there are two solutions:

- Running the entire application inside an MPC: This would also cover the re-registration process. When a person registers with the MPC, it assigns them an ID that is separate from, and cannot be linked to, their proof-of-personhood ID. When a person re-registers, only the MPC knows which account to deactivate. This prevents users from proving their own actions, because every important step is carried out inside the MPC using private information known only to the MPC.

- Decentralized registration ceremonies: Essentially, implement an in-person key-registration protocol that requires four randomly selected local participants to register someone together. This could ensure that registration is a trusted procedure that an attacker cannot snoop on.

In fact, social-graph-based systems may perform better here, because they can create local decentralized registration processes automatically, as a byproduct of how they work.

Biometric Technology vs. Social Graph-based Verification

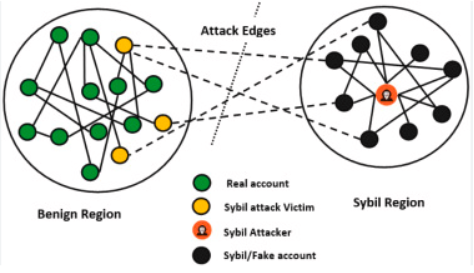

Besides biometric approaches, the other main contender for proof of personhood is social-graph-based verification. Social-graph-based verification systems all operate on the same principle: if a large number of existing verified identities attest to the validity of your identity, then you are probably valid and should also receive verified status.

If only a few real users (accidentally or maliciously) verify fake users, basic graph theory techniques can be used to set an upper limit on the number of fake users verified by the system.
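One way to see where such an upper limit comes from is a flow-based sketch. This is an illustrative choice, not the algorithm of any particular project: trust flows outward from a hypothetical `seed` account along vouching edges, every edge carries at most `edge_capacity` units, and an account counts as verified once one unit reaches it. Every unit that reaches a fake account must cross an edge from a real user to a fake one, so the number of verified fakes cannot exceed the number of such edges times `edge_capacity`.

```python
from collections import defaultdict, deque

SINK = "__sink__"  # artificial node that absorbs one unit per verified account

def max_flow(capacity, source, sink):
    """Edmonds-Karp: return (flow value, residual capacities)."""
    residual = defaultdict(lambda: defaultdict(int))
    for u, neighbours in capacity.items():
        for v, c in neighbours.items():
            residual[u][v] += c
    total = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in list(residual[u].items()):
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return total, residual
        # Walk back from the sink to collect the edges on the path.
        path, node = [], sink
        while parent[node] is not None:
            path.append((parent[node], node))
            node = parent[node]
        bottleneck = min(residual[u][v] for u, v in path)
        for u, v in path:
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
        total += bottleneck

def verified_accounts(vouches, seed, edge_capacity):
    """Return the accounts whose one-unit edge to SINK is saturated."""
    capacity = defaultdict(dict)
    accounts = set()
    for voucher, vouchee in vouches:
        capacity[voucher][vouchee] = edge_capacity
        accounts.update((voucher, vouchee))
    accounts.discard(seed)
    for account in sorted(accounts):
        capacity[account][SINK] = 1
    _, residual = max_flow(capacity, seed, SINK)
    return {a for a in accounts if residual[a][SINK] == 0}

honest_vouches = [("seed", "alice"), ("seed", "bob"), ("alice", "carol")]
attack_edges = [("carol", "fake0")]  # one real user vouches for a fake
fake_vouches = [("fake0", f"fake{i}") for i in range(1, 10)]

verified = verified_accounts(
    honest_vouches + attack_edges + fake_vouches, "seed", edge_capacity=3
)
fakes_verified = {a for a in verified if a.startswith("fake")}
```

Here the fake accounts vouch for each other freely, yet at most `len(attack_edges) * 3` of them can ever be verified. The choice of `edge_capacity` is a trade-off: a larger value lets trust reach real users further from the seed, but loosens the bound on fakes by the same factor.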

Supporters of social graph-based verification often describe it as a better alternative to biometric technology for the following reasons:

- It does not rely on specialized hardware, making it easier to deploy.

- It avoids the long-term arms race between makers of 3D-printed fake humans and the Orb.

- It does not require the collection of biometric data, which is more privacy-friendly.

- It may be friendlier to pseudonymity: if someone chooses to split their internet life across multiple independent identities, each of these identities may be verified (but maintaining multiple genuine and independent identities sacrifices network effects and is costly, so attackers are unlikely to do so easily).

- Biometric methods give a binary score of “is human” or “is not human,” which is fragile: people who are accidentally rejected may end up with no UBI at all and may be unable to participate in internet life. Social graph-based methods can provide a more nuanced numerical score, which may be moderately unfair to some participants but is unlikely to exclude anyone from internet life completely.

I generally agree with these arguments. They are genuine advantages of social graph-based methods and should be taken seriously. However, social graph-based methods also have shortcomings that are worth considering:

- Bootstrapping: To join a social graph-based system, users must know someone who is already in the graph. This makes large-scale adoption difficult and may exclude entire regions that are unlucky in the initial bootstrapping process.

- Privacy: Although social graph-based methods can avoid collecting biometric data, they often end up revealing a person’s social relationship information, which may lead to greater risks. Of course, zero-knowledge technology can mitigate this issue (for example, see the proposal by Barry Whitehat), but the inherent interdependence of the graph and the need for mathematical analysis of the graph make it difficult to achieve the same level of data hiding as biometric technology.

- Inequality: Each person can only have one biometric ID, but a well-connected person could use their relationships to generate many IDs. Fundamentally, the same flexibility that lets a social graph-based system provide multiple pseudonyms to people who truly need this feature (such as activists) likely also means that individuals with more power and wider social connections can obtain more pseudonyms than those with less power and fewer connections.

- Risk of collapse into centralization: Most people are not willing to spend time reporting to an internet application who is a real person and who is not. Therefore, over time, the system may come to rely on easy entry methods provided by central authorities, and the social graph of system users will effectively become the social graph of which countries recognize which people as citizens, giving us centralized KYC with unnecessary extra steps.

Is Proof of Personhood Compatible with Pseudonymity in the Real World?

In principle, proof of personhood is compatible with all kinds of pseudonymity. An application can be designed so that a person with a single proof of personhood ID can create up to five profiles within it, leaving room for pseudonymous accounts. One could even use a quadratic formula: N accounts at a cost of N² dollars. But will applications actually do this?

Pessimists, however, may argue that it is naive to create a more privacy-friendly form of identification and hope that it will be adopted in the right way, because those in power do not care about the privacy and security of ordinary people. If a powerful actor obtains a tool that can be used to gather more personal information, they will use it that way. In such a world, the argument goes, the only realistic approach is unfortunately to obstruct any identity solution and defend a world of full anonymity and digital islands of high-trust communities.

I understand the reasoning behind this view, but I am concerned that even if this approach succeeds, it will result in a world where no one can do anything to counteract wealth concentration and governance centralization, because one person can always pretend to be ten thousand people. Such points of centralization would, in turn, be easy for those in power to capture. I am instead inclined towards a moderate approach: strongly advocate for proof of personhood solutions that have strong privacy features, if desired introduce a mechanism at the protocol layer under which registering N accounts costs N² dollars, and create something that has privacy-friendly values and has a chance of being accepted by the outside world.
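To make the N² pricing concrete, here is a small sketch. The function names are hypothetical, and the text specifies only the total price; the per-account price is derived from it as n² − (n − 1)² = 2n − 1.

```python
def total_cost(n: int) -> int:
    """Total price in dollars for holding n accounts under N-squared pricing."""
    if n < 0:
        raise ValueError("number of accounts cannot be negative")
    return n * n

def marginal_cost(n: int) -> int:
    """Price of the n-th account when n - 1 are already held: 2n - 1."""
    if n < 1:
        raise ValueError("account index starts at 1")
    return 2 * n - 1

few_pseudonyms = total_cost(5)       # a user keeping five separate profiles
mass_sybil = total_cost(10_000)      # one person posing as ten thousand
```

Under this rule five profiles cost 25 dollars in total, while ten thousand cost 100,000,000 dollars, so a handful of pseudonyms stays affordable and large-scale impersonation becomes expensive.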

So, in the absence of an ideal form of proof of personhood, I believe we have three different paradigms, each with its own unique advantages and disadvantages. A comparison chart is as follows:

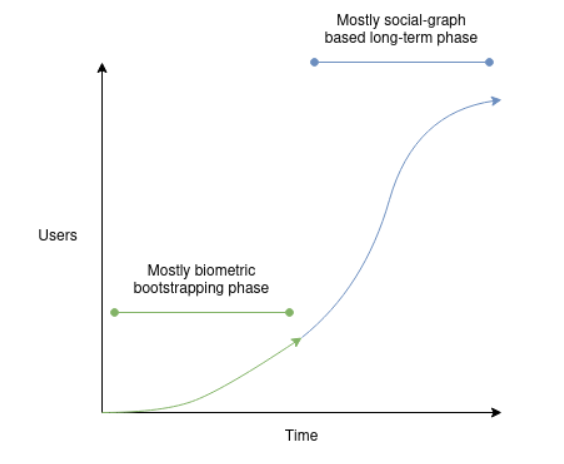

The ideal approach is to treat these three techniques as complementary and combine them. As India’s Aadhaar has demonstrated, specialized-hardware biometrics have the advantage of being secure at scale. They are very weak on decentralization, although this can be addressed by holding individual Orbs accountable. General-purpose biometrics can be adopted very easily today, but their security is rapidly declining, and they may only remain viable for another 1-2 years. Social graph-based systems bootstrapped from a few hundred people close to the founding team are likely to face a constant trade-off between missing large parts of the world entirely and being vulnerable to attacks in communities they have no visibility into. However, a social graph-based system bootstrapped from tens of millions of biometric ID holders could actually work. Biometric bootstrapping may be more effective in the short term, while social graph-based techniques may be more robust in the long term and take on a larger share of the responsibility as their algorithms improve.

A feasible hybrid solution

All of these teams are in a position to make many mistakes, and there is inevitably tension between business interests and the needs of the broader community, so we must remain vigilant. As a community, we can and should push all participants out of their comfort zones on open-sourcing their technology, and demand third-party audits, third-party-written software, and other checks and balances. We also need more alternatives in each of these three categories.

At the same time, we must also recognize the work that has already been done: many of the teams running these systems have shown a willingness to take privacy much more seriously than almost any government or large corporate identity system, and this is a success that we should build on.

Building a proof of personhood system that is effective and reliable, especially in the hands of people far from the existing crypto community, seems quite challenging. I do not envy those attempting this task, and it will likely take years to find a formula that works. In principle, the concept of proof of personhood seems very valuable, and while the various implementations have their risks, having no proof of personhood at all has risks too: a world without proof of personhood seems more likely to be dominated by centralized identity solutions, money, small closed communities, or some combination of the three. I look forward to seeing more progress on all types of proof of personhood and hope to see the different approaches eventually converge into a coherent whole.
