Author: TY, Medium; Translation: Lynn, MarsBit

Introduction

As Ethereum has evolved from an experimental technology into a mature system that can offer ordinary users an open, global, and permissionless experience, an important technical transition needs to take place: moving users to L2.

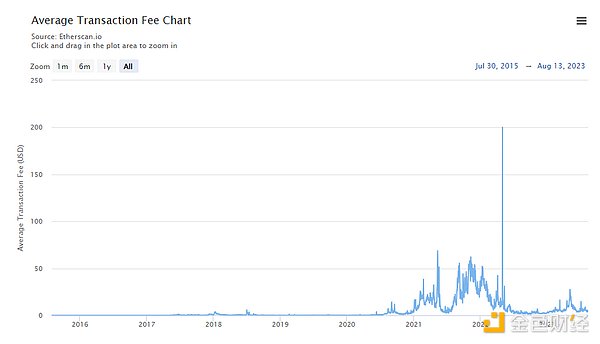

To achieve widespread adoption, Ethereum must be able to process millions of transactions per second. The scalability trilemma captures how hard it is to achieve decentralization, security, and scalability at the same time. With transaction costs ranging from $4 to $200, Ethereum is unattractive to many users.

Source: Etherscan.io

Addressing Ethereum’s scalability challenge through Rollup-centric approaches

Increasing the blockchain's capacity by packing more data and transactions into each block involves trade-offs: it may require more powerful hardware to run nodes, which can compromise decentralization. The alternative is to build higher-layer solutions on top of Ethereum as the base layer, rather than introducing new protocol features that could disrupt the network.

Off-chain scaling solutions scale the main blockchain indirectly. They move transaction execution off the Ethereum network while relying on the main chain for trust and dispute resolution. This approach is known as Layer 2 scaling, since it adds a layer on top of Ethereum. State channels, sidechains, Plasma, optimistic rollups, and validity rollups (commonly known as zk-rollups) all fall under this category.

Rollups provide a versatile, general-purpose solution that can even run the Ethereum Virtual Machine (EVM) inside the rollup. This means existing Ethereum smart contracts can be migrated to rollups with minimal code changes while still benefiting from Ethereum's security and data availability. Computation happens off-chain, but enough data is posted on-chain that anyone can reconstruct the complete rollup state locally when needed; optimistic rollups rely on this to detect and prove fraud.
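
To make local state reconstruction concrete, here is a minimal Python sketch under heavily simplified assumptions: the rollup state is a plain dictionary of balances, each posted batch is a list of transfers, and a SHA-256 hash of the JSON-encoded state stands in for a real Merkle state root. The names `apply_tx`, `state_root`, `replay`, and `check_claim` are hypothetical and do not correspond to any rollup's actual API.

```python
import hashlib
import json

# Hypothetical toy model: rollup state is a mapping of account -> balance.
# Real rollups execute full EVM transactions and commit to Merkle roots.


def apply_tx(state: dict[str, int], tx: dict) -> None:
    """Apply one transfer in place; skip it if the sender lacks funds."""
    sender, receiver, amount = tx["from"], tx["to"], tx["amount"]
    if state.get(sender, 0) >= amount:
        state[sender] = state.get(sender, 0) - amount
        state[receiver] = state.get(receiver, 0) + amount


def state_root(state: dict[str, int]) -> str:
    """Hash a canonical JSON encoding of the state (stand-in for a Merkle root)."""
    encoded = json.dumps(state, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def replay(genesis: dict[str, int], batches: list[list[dict]]) -> str:
    """Re-execute every posted batch from genesis and return the resulting root."""
    state = dict(genesis)  # copy so the caller's genesis is untouched
    for batch in batches:
        for tx in batch:
            apply_tx(state, tx)
    return state_root(state)


def check_claim(genesis: dict[str, int], batches: list[list[dict]], claimed_root: str) -> bool:
    """True if the sequencer's claimed root matches local re-execution."""
    return replay(genesis, batches) == claimed_root


if __name__ == "__main__":
    genesis = {"alice": 100, "bob": 0}
    batches = [
        [{"from": "alice", "to": "bob", "amount": 30}],
        [{"from": "bob", "to": "alice", "amount": 10}],
    ]
    honest_root = replay(genesis, batches)
    bogus_root = state_root({"alice": 100, "bob": 50})
    print(check_claim(genesis, batches, honest_root))  # True
    print(check_claim(genesis, batches, bogus_root))   # False
```

Anyone holding the genesis state and the posted batches can run `replay` and compare the result with the root the sequencer published; in an optimistic rollup, a mismatch is the starting point for a fraud proof.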

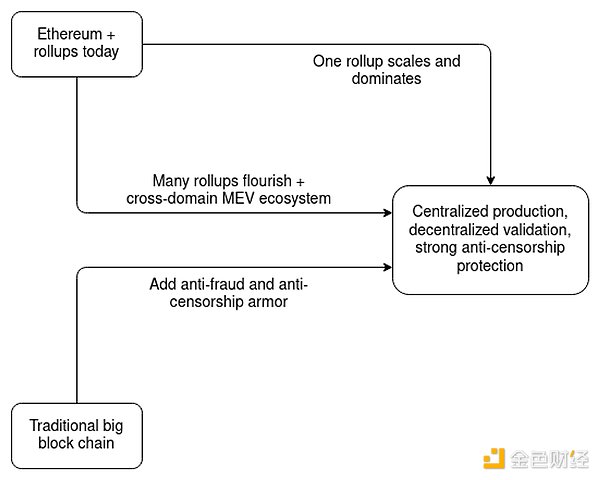

Each rollup requires specific contracts deployed on Ethereum. Rollup transactions are executed off-chain on a dedicated chain, and the transaction data is batched and compressed before being submitted to Ethereum. This reduces the load on Ethereum's computing resources, lowers costs, and makes transaction processing more scalable. Rollups may involve some degree of centralized block production, but as long as verification is decentralized and trustless, there is still strong protection against censorship.

Source: Vitalik’s “Endgame”
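
The batch-and-compress step and its cost on Ethereum can be sketched as follows. This is an illustration rather than any rollup's actual encoding: it serializes transactions as JSON and compresses them with zlib (real rollups use their own formats and compressors), then prices the result with Ethereum's calldata gas schedule from EIP-2028 (4 gas per zero byte, 16 gas per non-zero byte). The transaction fields and helper names are hypothetical.

```python
import json
import zlib

# Calldata gas schedule since EIP-2028 (Istanbul).
GAS_PER_ZERO_BYTE = 4
GAS_PER_NONZERO_BYTE = 16


def calldata_gas(data: bytes) -> int:
    """Gas charged for `data` when it is posted as transaction calldata."""
    zeros = data.count(0)
    return zeros * GAS_PER_ZERO_BYTE + (len(data) - zeros) * GAS_PER_NONZERO_BYTE


def serialize_batch(txs: list[dict]) -> bytes:
    """Compact JSON encoding (an assumption; real rollups use RLP or custom formats)."""
    return json.dumps(txs, separators=(",", ":")).encode()


def compress_batch(txs: list[dict]) -> bytes:
    """Serialize, then compress; zlib is a stand-in for a rollup's real compressor."""
    return zlib.compress(serialize_batch(txs), 9)


def decompress_batch(data: bytes) -> list[dict]:
    """Inverse of compress_batch, as a verifier re-deriving the batch would do."""
    return json.loads(zlib.decompress(data))


if __name__ == "__main__":
    # Hypothetical batch of 200 similar transfers.
    txs = [
        {"from": f"0x{i:040x}", "to": "0x" + "ab" * 20, "value": 1000, "nonce": i}
        for i in range(200)
    ]
    raw = serialize_batch(txs)
    packed = compress_batch(txs)
    assert decompress_batch(packed) == txs
    print(f"raw: {len(raw)} bytes, {calldata_gas(raw)} gas")
    print(f"compressed: {len(packed)} bytes, {calldata_gas(packed)} gas")
    print(f"per tx: {calldata_gas(packed) / len(txs):.1f} gas")
```

Compression shrinks the bytes that must be paid for, and spreading one submission across many transactions lowers the cost each user bears; the data still has to be posted, which is the cost EIP-4844 later targets.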

The landscape of Rollups today

Currently, Ethereum's rollup landscape includes both optimistic and zero-knowledge solutions, which compress batched transaction data and post it to Ethereum as calldata. However, this approach is expensive, because all of that data is stored permanently in Ethereum's transaction history.

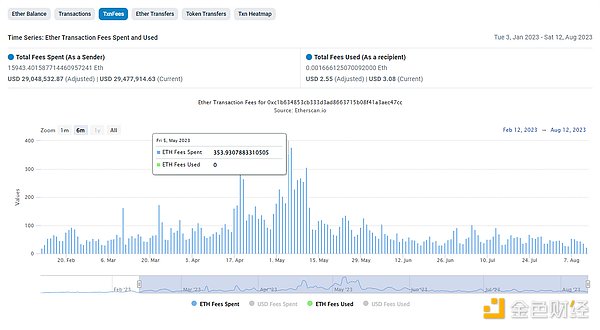

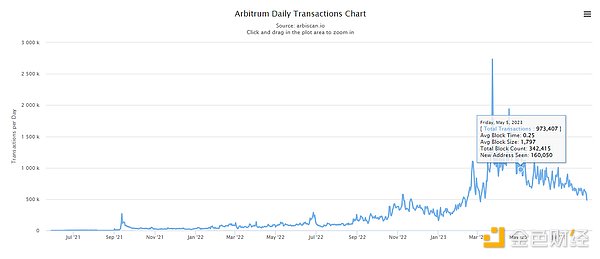

For example, on May 5, 2023, a sharp rise in Ethereum gas fees meant that Arbitrum's batch submissions to Ethereum consumed a large amount of gas (353.93 ETH), even though they amounted to only 1,369 transactions on Ethereum, carrying fewer than 1 million transactions on Arbitrum.

Source: Etherscan.io – Arbitrum Batch Submitter

Data source: Arbiscan.io
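
As a rough worked example using only the figures above, the gas Arbitrum paid that day averages out as follows. The second line is a lower bound on the cost per L2 transaction, because the batches carried fewer than 1 million of them ($N_{L2} < 10^6$).

```latex
\begin{aligned}
\frac{353.93\ \text{ETH}}{1{,}369\ \text{batch transactions}} &\approx 0.2585\ \text{ETH per batch transaction} \\
\frac{353.93\ \text{ETH}}{N_{L2}} > \frac{353.93\ \text{ETH}}{10^{6}} &\approx 3.54 \times 10^{-4}\ \text{ETH per L2 transaction}
\end{aligned}
```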

Before the Bedrock upgrade on June 7, the Optimism sequencer published an average of 3,000 transaction batches to Ethereum per day. After the upgrade, transaction volume on the Optimism network surged while the number of batch transactions submitted to Ethereum fell, indicating that each batch now carries more transactions.

As Ethereum rollups grow in popularity, with mainnet deployments such as Linea, Polygon zkEVM, and zkSync Era, and alternative L1s like Celo and Fantom considering a move to rollups, each of these solutions will eventually run into scalability bottlenecks tied to Ethereum gas fees.

Currently, about 7,000 transaction batches are submitted to Ethereum daily by rollups such as zkSync Era, Linea, Arbitrum, Base, and Optimism. As Ethereum L2 development attracts more attention and becomes more developer-friendly, this number is expected to keep growing.

Many projects are already building on Ethereum as OP Chains using the OP Stack, including Coinbase, DeBank, Mantle, Celo, Worldcoin, Zora Network, and Public Goods Network. In addition, many rollup projects are accelerating the release of their own stacks to support L2 (and L3) development.

Improving Rollup Efficiency with EIP-4844

As Ethereum's rollup-centric ecosystem keeps evolving, there is a growing need to make rollups on Ethereum more scalable while preserving data security and availability. The key idea is to avoid storing large amounts of data on Ethereum permanently, while still allowing users to compute the rollup's internal state when needed.

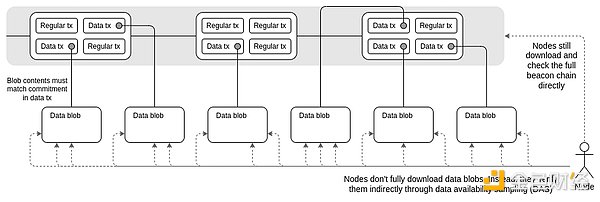

EIP-4844 introduces a new transaction type that carries Binary Large Objects (blobs) and reserves extra data space for rollups in every block: up to 6 blobs of 128 KB each. Given Ethereum's average of about 7,100 blocks per day and an average of 3 blobs per block (the target), this amounts to about 21,300 blobs per day.
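
The blob figures above can be checked with a few lines of Python. The constants are the original EIP-4844 values (4,096 field elements of 32 bytes per blob, a target of 3 and a maximum of 6 blobs per block); later network upgrades may change the per-block counts, and the 7,100 blocks per day is the article's own average. The helper names are hypothetical.

```python
# Original EIP-4844 parameters; later upgrades may change the per-block counts.
FIELD_ELEMENTS_PER_BLOB = 4096
BYTES_PER_FIELD_ELEMENT = 32
BLOB_SIZE = FIELD_ELEMENTS_PER_BLOB * BYTES_PER_FIELD_ELEMENT  # 131,072 bytes = 128 KiB

TARGET_BLOBS_PER_BLOCK = 3
MAX_BLOBS_PER_BLOCK = 6


def blobs_per_day(blocks_per_day: int, blobs_per_block: int) -> int:
    """Total blobs in a day at a given average number of blobs per block."""
    return blocks_per_day * blobs_per_block


def blob_bytes_per_day(blocks_per_day: int, blobs_per_block: int) -> int:
    """Raw blob bytes per day (an upper bound on usable rollup payload)."""
    return blobs_per_day(blocks_per_day, blobs_per_block) * BLOB_SIZE


if __name__ == "__main__":
    print(blobs_per_day(7100, TARGET_BLOBS_PER_BLOCK))  # 21300
    print(blob_bytes_per_day(7100, TARGET_BLOBS_PER_BLOCK) / 2**30, "GiB per day")
    print(TARGET_BLOBS_PER_BLOCK * BLOB_SIZE // 1024, "KiB target per block")  # 384
    print(MAX_BLOBS_PER_BLOCK * BLOB_SIZE // 1024, "KiB max per block")  # 768
```

In practice the usable payload per blob is slightly below 128 KB, because each 32-byte field element must be smaller than the BLS12-381 scalar field modulus; a common approach is to pack 31 bytes of data into each element.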

With this upgrade, the sequencer formats batch transaction data into blobs and submits them to Ethereum's mempool. Validators then include these blob transactions in blocks, and the blobs are kept temporarily on Ethereum's consensus layer for up to about three weeks before being pruned. Because the data is no longer stored permanently as calldata, this significantly reduces the cost of posting rollup data to Ethereum. However, it also means that blob data cannot be read directly from Ethereum's execution layer.

Source: Original Danksharding FAQ
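
Two details help explain why blob data is cheap yet not readable from the execution layer. First, the EVM never sees the blob itself, only a 32-byte versioned hash of its KZG commitment, computed as in the `kzg_to_versioned_hash` helper from the EIP-4844 specification (reproduced below). Second, consensus clients only need to serve blobs for `MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS` = 4096 epochs, which at 32 slots per epoch and 12 seconds per slot is roughly 18 days, consistent with the three-week upper figure. The commitment in the usage example is placeholder bytes, not a real KZG commitment, and `blob_retention_seconds` is a hypothetical helper.

```python
import hashlib

# From the EIP-4844 specification.
VERSIONED_HASH_VERSION_KZG = b"\x01"

# Consensus-layer (Deneb) parameters for how long blobs must be served to peers.
MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS = 4096
SLOTS_PER_EPOCH = 32
SECONDS_PER_SLOT = 12


def kzg_to_versioned_hash(commitment: bytes) -> bytes:
    """32-byte hash the EVM sees in place of the blob: version byte + sha256(commitment)[1:]."""
    if len(commitment) != 48:
        raise ValueError("a KZG commitment is a 48-byte compressed BLS12-381 G1 point")
    return VERSIONED_HASH_VERSION_KZG + hashlib.sha256(commitment).digest()[1:]


def blob_retention_seconds() -> int:
    """Minimum time consensus clients keep blobs available to peers."""
    return MIN_EPOCHS_FOR_BLOB_SIDECARS_REQUESTS * SLOTS_PER_EPOCH * SECONDS_PER_SLOT


if __name__ == "__main__":
    placeholder_commitment = bytes(48)  # NOT a valid commitment; illustration only
    print(kzg_to_versioned_hash(placeholder_commitment).hex())
    print(blob_retention_seconds() / 86400, "days")  # about 18.2
```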

In addition, this upgrade introduces a separate fee market for blob transactions, modeled on the EIP-1559 fee market. Imagine a busy burger shop that sets up a separate production line for its popular soft-serve ice cream: in the same way, blobs are priced in their own dedicated fee market, decoupled from regular transactions. For each block that uses more than half of the maximum blob space (the target of 3 blobs, or 384 KB), the blob base fee rises by up to 12.5%; when a block uses less than the target, it falls.
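
The blob fee rule can be sketched with the pricing functions from the EIP-4844 specification. `fake_exponential` and the constants below follow the original EIP (later upgrades retune some of them); `calc_excess_blob_gas` is simplified here to take two integers instead of a parent block header, and the starting excess of 60,000,000 blob gas in the usage example is an arbitrary assumption chosen so the fee is large enough to show the percentages clearly.

```python
# Constants from the original EIP-4844 specification (later upgrades retune some).
GAS_PER_BLOB = 2**17  # 131,072
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB  # 393,216
MAX_BLOB_GAS_PER_BLOCK = 6 * GAS_PER_BLOB  # 786,432
MIN_BASE_FEE_PER_BLOB_GAS = 1  # wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e ** (numerator / denominator) via its Taylor series."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def calc_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Blob gas above target carried forward (simplified: takes ints, not a header)."""
    if parent_excess + parent_blob_gas_used < TARGET_BLOB_GAS_PER_BLOCK:
        return 0
    return parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK


def blob_base_fee(excess_blob_gas: int) -> int:
    """Price per unit of blob gas, in wei, for a block with the given excess."""
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION)


if __name__ == "__main__":
    excess = 60_000_000  # arbitrary starting point for illustration
    base = blob_base_fee(excess)
    for label, used in [("6 blobs (full)", MAX_BLOB_GAS_PER_BLOCK),
                        ("3 blobs (target)", TARGET_BLOB_GAS_PER_BLOCK),
                        ("0 blobs (empty)", 0)]:
        nxt = blob_base_fee(calc_excess_blob_gas(excess, used))
        print(f"{label}: next fee / current fee = {nxt / base:.4f}")
```

Because the fee is exponential in the accumulated excess, a full block multiplies it by about 1.125, a block at the 3-blob target leaves it unchanged, and an empty block divides it by about 1.125 (a drop of roughly 11%).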

KZG commitments play a critical role in EIP-4844. They are polynomial commitments, a cryptographic primitive also used in many zero-knowledge proof systems, and they make it efficient to commit to and verify large data objects such as blobs. Each blob is represented as a polynomial, so a verifier can check claims about the blob's contents using a short commitment and proof instead of reading the entire blob.
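
For readers who want the underlying math, here is the textbook form of a KZG commitment and opening proof. Here $\tau$ is the secret from the trusted setup, $[x]_1$ and $[x]_2$ denote $x$ times the generators of the two pairing groups, $e$ is the pairing, $\omega$ is a root of unity, and $b_i$ are the blob's field elements. EIP-4844 uses an equivalent Lagrange-basis form, so treat this as a sketch rather than the exact encoding.

```latex
\begin{aligned}
&\text{Blob as polynomial: } p(\omega^i) = b_i,\quad i = 0,\dots,4095,\quad \deg p < 4096 \\
&\text{Commitment: } C = [p(\tau)]_1 \\
&\text{Claim } p(z) = y:\quad q(X) = \frac{p(X) - y}{X - z},\qquad \pi = [q(\tau)]_1 \\
&\text{Check: } e\big(C - [y]_1,\ [1]_2\big) = e\big(\pi,\ [\tau]_2 - [z]_2\big)
\end{aligned}
```

The check succeeds because $p(\tau) - y = q(\tau)(\tau - z)$ by construction, so a single short proof $\pi$ convinces the verifier of one value of the blob's polynomial without the verifier reading the blob.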

The KZG ceremony was launched in January 2023 and has received over 130,000 contributions at the time of writing. It is a one-time trusted setup that combines randomness (entropy) from many contributors to produce a secret value that no one can reconstruct: the setup remains secure as long as at least one participant honestly discarded their contribution. This is what makes the resulting KZG commitments trustworthy.
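
Why one honest participant is enough can be shown with a toy version of a "powers of tau" update. In this sketch the powers are plain integers modulo the prime 2^61 - 1, so every value is visible and nothing is secure; the real ceremony performs the same multiplication on elliptic-curve points so that tau itself is never revealed, and it also verifies each contribution with pairing checks, which is omitted here. The function names are hypothetical.

```python
import secrets

# Toy prime field for illustration (2**61 - 1 is a Mersenne prime).
P = 2**61 - 1


def initial_powers(n: int) -> list[int]:
    """Start with tau = 1, i.e. the list [1, 1, ..., 1] of powers tau**0 .. tau**(n-1)."""
    return [1] * n


def contribute(powers: list[int], secret: int) -> list[int]:
    """Multiply the i-th power by secret**i, which replaces tau with tau * secret."""
    return [(p * pow(secret, i, P)) % P for i, p in enumerate(powers)]


def run_ceremony(n: int, num_participants: int) -> tuple[list[int], int]:
    """Apply one random contribution per participant; return powers and the combined tau."""
    powers = initial_powers(n)
    tau = 1
    for _ in range(num_participants):
        s = secrets.randbelow(P - 1) + 1  # nonzero secret, discarded after use
        powers = contribute(powers, s)
        tau = tau * s % P  # tracked here only to check the result; never known in reality
    return powers, tau


if __name__ == "__main__":
    powers, tau = run_ceremony(n=8, num_participants=5)
    print(powers == [pow(tau, i, P) for i in range(8)])  # True
```

After all contributions, the effective secret is the product of every participant's secret, so it stays unknown as long as any single participant discarded theirs.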

It is worth noting that while this upgrade does not directly increase Ethereum's transaction capacity, it significantly reduces the operating costs of rollups. This makes L2s more cost-efficient while still giving rollups secure data availability.

Laying the foundation for full danksharding

Although EIP-4844 is initially expected to make blob transactions cheaper than regular transactions, blob costs may rise as the number of Ethereum rollups grows. The longer-term goal that EIP-4844 prepares for is to fit up to 64 blobs per block without placing an excessive burden on nodes during block validation. The aim is to make Ethereum an optimized data availability (DA) layer and, over the long run, gradually move end users to transact on rollups rather than directly on Ethereum.

Full danksharding requires data availability sampling (DAS) and erasure coding. DAS lets nodes confirm that blob data has actually been published without downloading all of it: each node randomly samples small chunks of the data, and the more samples succeed, the higher the confidence that the complete data is available. Erasure coding handles the case where a malicious party withholds part of the blob data. It adds redundancy so that missing pieces can be reconstructed from the known ones, which defeats attempts to withhold data.
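
Both ideas can be illustrated with a small Python sketch under simplifying assumptions: data is a list of field elements modulo the prime 2^31 - 1, the erasure code is a one-dimensional Reed-Solomon-style extension that doubles the data by evaluating its interpolating polynomial at extra points, and sampling picks chunks uniformly at random. Full danksharding is expected to use a two-dimensional scheme with KZG commitments, so this is not the protocol's actual construction, and the function names are hypothetical.

```python
import random

# Toy prime field (2**31 - 1 is prime); real designs use the BLS12-381 scalar field.
P = 2**31 - 1


def lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for j, (xj, yj) in enumerate(points):
        num, den = 1, 1
        for m, (xm, _) in enumerate(points):
            if m != j:
                num = num * (x - xm) % P
                den = den * (xj - xm) % P
        total = (total + yj * num * pow(den, P - 2, P)) % P  # den**(P-2) is den's inverse
    return total


def extend(data: list[int]) -> list[int]:
    """2x Reed-Solomon-style extension: treat data[i] as p(i), append p(k)..p(2k-1)."""
    k = len(data)
    points = list(enumerate(data))
    return data + [lagrange_eval(points, x) for x in range(k, 2 * k)]


def reconstruct(available: list[tuple[int, int]], k: int) -> list[int]:
    """Rebuild the original k values from any k surviving (index, value) chunks."""
    if len(available) < k:
        raise ValueError("need at least k chunks to reconstruct")
    pts = available[:k]
    return [lagrange_eval(pts, x) for x in range(k)]


def all_samples_pass_probability(samples: int, available_fraction: float) -> float:
    """Chance that every uniformly random sample hits an available chunk."""
    return available_fraction ** samples


if __name__ == "__main__":
    k = 8
    data = [random.randrange(P) for _ in range(k)]
    extended = extend(data)
    # Any k of the 2k chunks suffice, e.g. a random half of them.
    survivors = random.sample(list(enumerate(extended)), k)
    print(reconstruct(survivors, k) == data)  # True
    # If more than half is withheld, at most half is available: this is an upper bound.
    print(all_samples_pass_probability(30, 0.5))
```

With a 2x extension, any half of the chunks is enough to rebuild the data, so a withholder must hide more than half of them; each random sample then fails with probability above 1/2, and the chance that 30 independent samples all succeed is below 2^-30, about one in a billion.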

Aspects of the Rollups ecosystem worth exploring

As the rollup space expands, building decentralized fraud proofs and shared sequencers becomes crucial. Today's rollups typically operate in silos, focusing on attracting specific user groups, and may overlook the importance of interoperability between L2s. Cross-chain communication protocols between L2s will play a crucial role in giving users a seamless experience across the wider Ethereum ecosystem. It will also be interesting to watch the cross-chain MEV that emerges as these rollups develop.

Although Ethereum aims to be the most decentralized and secure data availability (DA) layer, existing decentralized DA services such as EigenLayer and Celestia already meet rollups' DA requirements. It will be interesting to see how the DA landscape evolves to make the Ethereum ecosystem more efficient, especially since full danksharding will take several years to complete.

Concluding Thoughts

To scale further through rollups, Ethereum must become an optimized data availability layer that provides security and attracts new rollups to launch and settle on it. This must be achieved without requiring Ethereum to store rollup data permanently, since the rapid growth of the rollup landscape could put pressure on Ethereum nodes and lead to centralization. For Ethereum to scale effectively and handle the coming wave of adoption, it must adopt advanced data handling and verification techniques to meet growing demand.

As Ethereum's rollup capacity and functionality continue to develop, the impact of EIP-4844 on L2 costs remains to be seen. It will also be interesting to see how far this upgrade stimulates rollup activity and unlocks the potential of related technologies.