On June 10, OpenAI co-founder and CEO Sam Altman gave his first speech to a Chinese audience, appearing by video link at the 2023 Zhiyuan Artificial Intelligence Conference in Beijing. In the speech, Altman quoted the Tao Te Ching and discussed cooperation among great powers, saying that AI safety begins with a single step and that countries must cooperate and coordinate with each other.

Altman then took part in a one-on-one Q&A with Zhang Hongjiang, chairman of the Zhiyuan Research Institute.

Dr. Zhang Hongjiang is currently the chairman of the Beijing Zhiyuan Artificial Intelligence Research Institute (BAAI) and also serves as an independent director and consultant for multiple companies. He was previously an executive director and CEO of Kingsoft and CEO of Kingsoft Cloud. He was a co-founder of Microsoft Research Asia and served as its vice president. He was also chief technology officer of Microsoft’s Asia Pacific R&D Group (ARD), dean of the Microsoft Asia Engineering Institute (ATC), and a Microsoft “Distinguished Scientist.”

Prior to joining Microsoft, Zhang Hongjiang was a manager at HP Labs in Silicon Valley and worked at the Institute of Systems Science at the National University of Singapore.

Core content of Altman’s speech

The AI revolution matters so much not only because of the scale of its impact, but also because of the speed of its progress. This brings both benefits and risks.

With the emergence of increasingly powerful AI systems, the importance of global cooperation has never been greater. On some important matters, countries must cooperate and coordinate with each other. Advancing AGI safety is one of the most important areas where we need to find common interests.

Alignment is still an unsolved problem. The alignment work on GPT-4 took eight months. Research is still ongoing, mainly on scalable oversight and interpretability.

Core content of the Q&A session

Within ten years, humans will have powerful AI systems.

OpenAI does not have a new timeline for open source. Open source models have advantages, but open sourcing everything may not be the best way to promote AI development.

It is much easier to understand neural networks than to understand the human brain.

At some point, we will try to make a GPT-5 model, but it won’t be very soon. I don’t know exactly when GPT-5 will appear.

Chinese researchers’ participation and contributions are needed for AI safety. Note: “AI alignment” is the most important problem in AI control, which requires that the goals of AI systems be aligned (consistent) with human values and interests.

Full text of Sam Altman’s speech

With the emergence of increasingly powerful artificial intelligence systems, the stakes for global cooperation have never been higher.

If we’re not careful, an AI system designed to improve public health outcomes could offer baseless recommendations and disrupt the entire healthcare system. Similarly, an AI system designed to optimize agricultural production could inadvertently deplete natural resources or damage ecosystems, because it fails to consider the long-term sustainability of food production and the environmental balance it depends on.

I hope we can all agree that pushing forward on AGI safety is one of the most important areas where we need to work together and find common ground.

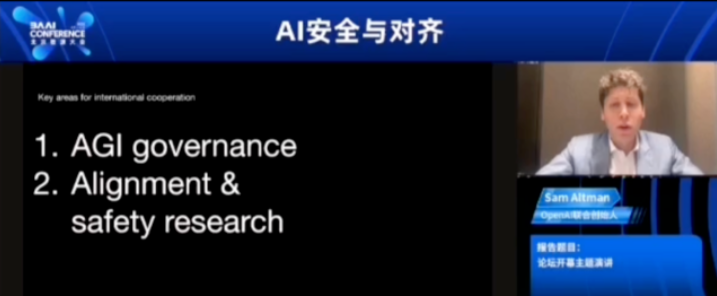

The rest of my remarks will focus on where we can start. The first area is AGI governance. AGI will fundamentally become a powerful force that changes our civilization, which underscores the need for meaningful international cooperation and coordination. Everyone benefits from a cooperative approach to governance. If we navigate this path safely and responsibly, AGI systems can create unparalleled prosperity for the global economy, address common challenges such as climate change and global health security, and improve social welfare.

I also strongly believe that we need to invest in AGI safety if we want to get where we want to go and enjoy it once we are there.

To do this, we need to coordinate carefully. This is a global technology with global impact. The costs of accidents caused by reckless development and deployment will affect all of us.

In international cooperation, I believe there are two key areas that are most important.

First, we need to establish international norms and standards, and pay attention to inclusiveness in the process. AGI systems should be guided equally and consistently by such international standards and norms wherever they are used. Within these safety guardrails, we believe people should have ample opportunity to make their own choices.

Second, we need international collaboration to build trust, in verifiable ways, in the safe development of increasingly powerful AI systems. I don’t think this is an easy thing to do, and it requires a lot of sustained attention and investment.

The “Tao Te Ching” tells us: “A journey of a thousand miles begins with a single step.” We believe that the most constructive first step in this direction is to work with the international scientific and technological community.

It should be emphasized that we should strengthen mechanisms for transparency and knowledge sharing as the technology advances. On AGI safety, researchers who discover new safety issues should share their insights for the greater good.

We need to think seriously about how to encourage this kind of norm while respecting and protecting intellectual property. If we do this, it will open new doors for us to deepen cooperation.

More broadly, we should invest in promoting and guiding research on AI alignment and safety.

At OpenAI, our research today focuses mainly on technical issues: making our current systems more helpful and safer. This may also mean training ChatGPT so that it does not make violent threats or assist users in harmful activities.

However, as we approach the age of AGI, the potential impact and scale of unaligned AI systems will multiply. Actively addressing these challenges now can minimize the risk of catastrophic results in the future.

For current systems, we primarily use reinforcement learning from human feedback to train our models to be helpful and safe assistants. This is just one example of the various post-training adjustment techniques. We are also working hard on new techniques, which require a lot of hard engineering work.

From the end of pre-training for GPT-4 to its deployment, we spent a dedicated eight months working on alignment. Overall, we think we did well in this regard. GPT-4 is more aligned with humans than any model we’ve had before.

However, for more advanced systems, alignment is still an unsolved problem, which we believe requires new technological approaches as well as enhanced governance and oversight.

Consider a future AGI system that proposes 100,000 lines of binary code. Human supervisors are unlikely to be able to tell whether such a model is doing something malicious. So we are investing in some new, complementary research directions, hoping to achieve breakthroughs.

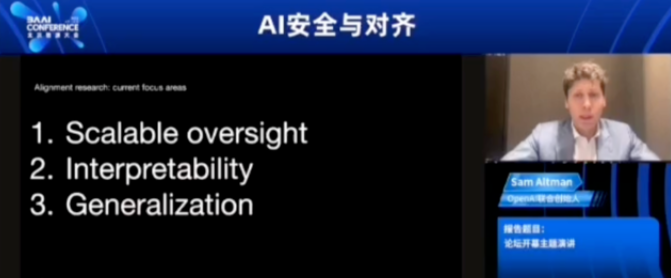

One is scalable oversight. We can try to use AI systems to assist humans in supervising other AI systems. For example, we can train a model to help human supervisors detect flaws in the output of other models.

The second is interpretability. We want to better understand what is happening inside these models. We recently published a paper that uses GPT-4 to explain neurons in GPT-2. In another paper, we used model internals to detect when a model is lying. We still have a long way to go. We believe that advanced machine learning techniques can further improve our ability to interpret these models.

Ultimately, our goal is to train AI systems to help with alignment research. The benefit of this approach is that it can scale with the speed of AI development.

Gaining the extraordinary benefits of AGI while reducing its risks is one of the defining challenges of our time. We see great potential in researchers from China, the United States, and around the world working together toward a common goal and dedicating themselves to the technical challenges of AGI alignment.

If we do this, I believe we will be able to use AGI to solve the most important problems in the world and greatly improve the quality of life for humanity. Thank you very much.

Dialogue Record

We will have very powerful AI systems in the next ten years

Zhang Hongjiang, chairman of the Zhiyuan Research Institute, asked: How far are we from artificial general intelligence (AGI)? Is the risk urgent, or are we still far away from it?

Sam Altman: It’s difficult to assess the specific time. It is very likely that we will have very powerful AI systems in the next ten years. The speed at which new technologies fundamentally change the world is faster than we imagine. In that world, I think it is important and urgent to do this (AI safety rules), which is why I call on the international community to work together.

In a sense, the acceleration of new technology and the systemic impact we are seeing now are unprecedented. So I think it’s important to be prepared for what’s coming and to understand the safety issues. Given the vast scale of AI, the stakes are quite significant.

Global cooperation on AI safety standards and frameworks

Zhang Hongjiang: You mentioned global cooperation several times in your introduction. We know that the world has faced quite a few crises in the past. Somehow, for many of them, we managed to establish consensus and global cooperation. You are also on a global tour. What kind of global cooperation are you working to promote?

Sam Altman: Yes, I am very satisfied with the response and answers from everyone so far. I think people take the risks and opportunities of AGI very seriously.

I think there has been considerable progress in the discussion of safety over the past six months. People seem really committed to finding a structure that will allow us to enjoy these benefits while working together globally to reduce risk. I think we’re very well positioned to do this. Global cooperation is always difficult, but I see this as both an opportunity and a threat that can bring the world together. We can propose a framework and safety standards for these systems, which will be very helpful.

How to solve the alignment problem of artificial intelligence

Zhang Hongjiang: You mentioned that the alignment of advanced AI is an unsolved problem. I also noticed that OpenAI has put in a lot of effort over the past few years. You mentioned that GPT-4 is the best example so far in the alignment field. Do you think we can solve the safety problems of AGI simply by fine-tuning the API? Or is it much more difficult than that?

Sam Altman: I think there are different ways to understand the word alignment. I think we need to address challenges in the entire AI system, and traditional alignment – making the model’s behavior consistent with the user’s intent – is of course part of it.

But there are other questions, such as how we verify what these systems are doing and that it is what we want them to do, and how we adjust the values of the system. Most importantly, we need to look at the overall picture of AGI safety.

If there is no technical solution, everything else is difficult. I think it is very important to focus on ensuring that we solve the safety-related technical problems. As I mentioned, figuring out what our values are is not a technical problem. Although it requires technical input, it is a problem that is worth discussing deeply by society as a whole. We must design fair, representative, and inclusive systems.

Zhang Hongjiang: Regarding alignment, we can see what GPT-4 is doing from a technical standpoint. But besides technology, there are many other factors, and they are often systemic. AI safety may be no exception here. Beyond the technical aspects, what other factors and issues do you think are crucial to AI safety? How should we deal with these challenges, especially since most of us are scientists? What should we do?

Sam Altman: This is undoubtedly a very complex issue. But if there is no technical solution, everything else is difficult to solve. I think it is very important to focus on ensuring that we solve the safety-related technical problems. As I mentioned, figuring out what our values are is not a technical problem. It requires technical input, but it is also a problem that is worth discussing deeply by society as a whole. We must design fair, representative, and inclusive systems.

And, as you pointed out, we need to consider not just the safety of the AI model itself, but that of the entire system.

Therefore, it is important to build safety classifiers and detectors that run on top of the system and monitor whether the AI is complying with usage policies. I think it is difficult to predict in advance all the problems that any technology will encounter. So we learn from real-world use and deploy iteratively, seeing what happens when these systems meet reality and improving them.

It is also important to give humans and society time to learn and update, and to think about how these models will interact with their lives in good and bad ways.

Need for global cooperation

Zhang Hongjiang: Earlier, you mentioned global cooperation. You have been traveling around the world, and China, the United States, and Europe are all driving forces behind AI innovation. In your opinion, what advantages do different countries have in solving AGI problems, especially AI safety problems? How can these advantages be combined?

Sam Altman: I think AI safety generally requires a lot of different perspectives and inputs. We don’t have all the answers yet, and it’s a fairly difficult and important problem.

Additionally, as was mentioned, this is not purely a technical problem to make AI safe and beneficial. It involves understanding user preferences in very different contexts and countries. We need a lot of different inputs to achieve this goal. China has some of the best AI talent in the world, and fundamentally, I think the best minds from around the world are needed to consider the difficulty of aligning advanced AI systems. So I really hope that Chinese AI researchers make a huge contribution here.

Very different architectures needed to make AGI safer

Zhang Hongjiang: A follow-up question about GPT-4 and AI safety: is it possible that we will need to change the entire underlying architecture, or the entire system architecture, of AGI models in order to make them safer and easier to inspect?

Sam Altman: It is entirely possible that we will need some very different architectures, both from a functional standpoint and from a safety standpoint.

I think we’ll be able to make some progress on interpretability for our current kinds of models, and on making them better at explaining to us what they’re doing and why. But yes, if there’s another huge leap after the Transformer, I wouldn’t be surprised. We’ve already changed the architecture a lot since the original Transformer.

Possibility of OpenAI open sourcing

Zhang Hongjiang: I understand that today’s forum is about AI safety, but because people are curious about OpenAI, I have a lot of questions about OpenAI, not just about AI safety. Here’s a question from the audience: Does OpenAI have plans to open source its models again, as it did before version 3.0? I also think open sourcing is beneficial for AI safety.

Sam Altman: Some of our models are open source, some of them aren’t, but over time, I think you should expect us to continue to open source more models. I don’t have a specific model or timeline, but it’s something we’re currently discussing.

Zhang Hongjiang: BAAI turns all efforts into open source, including the models and algorithms themselves. We believe that we have this need to share and give, and you have a sense of control over them. Do you have similar ideas, or have you discussed these topics with your peers or colleagues at OpenAI?

Sam Altman: Yes, I do think that open source has a role to play to a certain extent.

Recently, a lot of new open source models have emerged. I think API models also have an important role to play. They give us additional safety controls. You can block certain uses. You can prevent certain types of fine-tuning. If something isn’t working, you can take it back. At the scale of the current models, I’m not too worried about this issue. But as the models get as powerful as we expect them to be, if we’re right about this, I don’t think open sourcing everything is necessarily the best path, although sometimes it is. I think we just need to balance it carefully.

We will have more open source large-scale models in the future, but there is no specific model or timetable.

What’s the next step for AGI? Will we see GPT-5 soon?

Zhang Hongjiang: As a researcher, I’m also curious about what the next step in AGI research will be. In large models and large language models, will we see GPT-5 soon? Is the next frontier in embodied models? Is autonomous robotics a field that OpenAI is exploring or preparing to explore?

Sam Altman: I’m also curious about what’s going to happen next. One of the reasons I love doing this work is because it’s at the cutting edge of research, and there are a lot of exciting and surprising things. We don’t have answers yet, so we’re exploring a lot of possible new paradigms. Of course, at some point, we’ll try to do a GPT-5 model, but it won’t be soon. We don’t know exactly when. We did some work in robotics when OpenAI first started, and we’re very interested in it, but we also ran into some difficulties. I hope that one day we can return to this field.

Zhang Hongjiang: That sounds great. You also mentioned in your talk how you used GPT-4 to explain how GPT-2 works, which makes the model safer. Is this approach scalable? Is this a direction that OpenAI will continue to pursue in the future?

Sam Altman: We will continue to pursue this direction.

Zhang Hongjiang: Do you think this approach can be applied to biological neurons? I ask because some biologists and neuroscientists want to use this approach to study how human neurons work in their own field.

Sam Altman: It is much easier to observe what happens on artificial neurons than on biological neurons. So I think this approach is effective for artificial neural networks. I think using more powerful models to help us understand other models is feasible. But I’m not sure how you apply this method to the human brain.

Is it feasible to control the number of models?

Zhang Hongjiang: Okay, thank you. Since we’re talking about AI safety and AGI control, one issue we’ve been discussing is whether it would be safer if there were only three models in the world. This is like nuclear arms control: you don’t want nuclear weapons to proliferate. We have treaties for that, and we try to control the number of countries that can get this technology. So is it feasible to control the number of models?

Sam Altman: I think there are different views on whether it’s safer to have a few models or many models in the world. I think what’s more important is whether we have a system that allows any powerful model to undergo sufficient safety testing. Do we have a framework that allows anyone who creates something powerful enough to have the resources and the responsibility to ensure that what they create is safe and aligned?

Zhang Hongjiang: Yesterday at this conference, Professor Max Tegmark of MIT and the Future of Life Institute mentioned a possible approach, similar to the way we regulate drug development. When scientists or companies develop a new drug, they can’t take it directly to market. They have to go through a testing process. Is this something we can learn from?

Sam Altman: I absolutely think we can learn a lot from the licensing and testing frameworks that have emerged in different industries. But I think fundamentally, we already have some methods that can work.

Zhang Hongjiang: Thank you very much, Sam. Thank you for taking the time to attend this meeting, even though it’s online. I’m sure there are many more questions, but given the time, we have to stop here. I hope you have a chance to come to China, to Beijing, and we can have more in-depth discussions. Thank you very much.
