Source: IOSG Ventures

Over the past year, EigenLayer has released its whitepaper, closed a $50 million Series A round, and launched the first phase of its mainnet. During this period, the Ethereum community has engaged in extensive discussions about EigenLayer and its use cases. This article tracks and summarizes these discussions.

Background

In the Ethereum ecosystem, some middleware services (such as oracles) do not completely rely on on-chain logic, and therefore cannot directly leverage Ethereum’s consensus and security. They need to bootstrap a trust network. The usual approach is for the project team to first operate the service, then introduce token incentives to attract participants and gradually achieve decentralization.

There are at least two challenges in doing so. First, introducing incentive mechanisms incurs additional costs: the participants have to purchase tokens for staking, which represents their opportunity cost, and the project team has to bear the operating costs to maintain the token value. Second, even if the above costs are incurred and a decentralized network is built, its security and sustainability are still unknown. These two points are particularly challenging for early-stage projects.

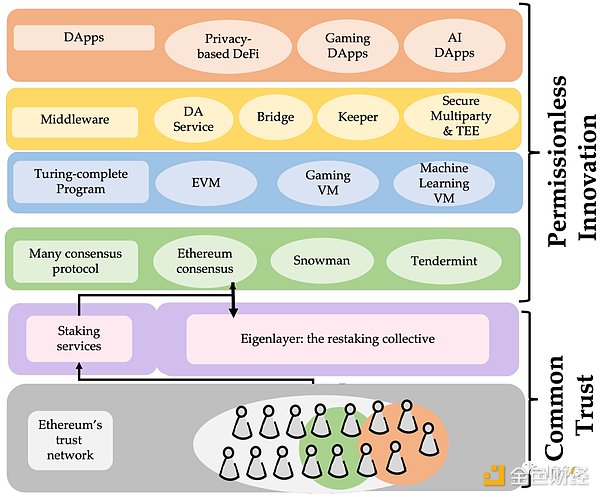

The idea of EigenLayer is to allow existing Ethereum validators to restake their tokens, thereby providing economic security for these middleware services, also known as Actively Validated Services (AVS). If these restakers work honestly, they receive rewards, but if they act maliciously, their original Ethereum stake is slashed.

The benefits of this approach are twofold. First, the project team does not need to build a new trust network on its own; it can outsource this to Ethereum validators, thus minimizing capital costs. Second, the economic security of the Ethereum validator pool is very robust, which provides a certain level of security. From the perspective of Ethereum stakers, restaking provides additional income, and as long as they do not act maliciously, the overall risk is controllable.

Sreeram, the founder of EigenLayer, has mentioned three use cases and trust models of EigenLayer on Twitter and podcasts:

- Economic Trust: Ethereum stake is reused as collateral. The higher the value staked, the stronger the economic security, as discussed above.

- Decentralized Trust: In some services (such as secret sharing), malicious behavior cannot be attributed, so slashing cannot be relied on. These services need a sufficiently decentralized and independent set of participants to guard against collusion.

- Ethereum Validator Commitments: Block producers put up their staked positions as collateral and make credible commitments. We will list some examples in the following sections.

System Participants

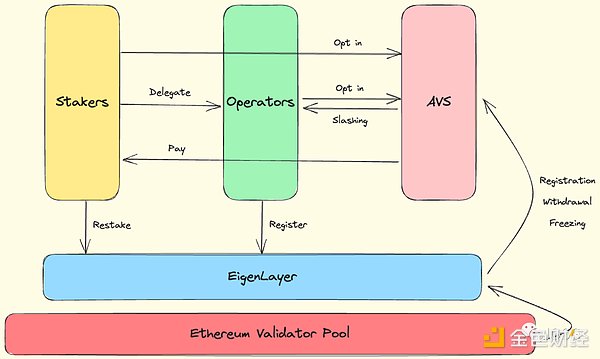

EigenLayer is an open marketplace that connects three main groups of participants.

- Re-stakers. If you have an Ethereum staking position, you can participate in re-staking by pointing your withdrawal credentials to EigenLayer, or simply by depositing an LST such as stETH. Re-stakers who cannot run an AVS node themselves can delegate their position to an operator.

- Operators. Operators accept delegations from re-stakers and run AVS nodes. They are free to choose which AVSs to serve. Once they serve an AVS, they must accept the slashing rules defined by that AVS.

- AVS. The AVS, as the demand side (the consumer), pays re-stakers and obtains the economic security they provide.

With these basic concepts in mind, let’s take a look at specific use cases of EigenLayer.

EigenDA

EigenDA, the flagship product launched by EigenLayer, builds on ideas from Danksharding, Ethereum’s scalability solution. The data availability sampling (DAS) it uses is also widely applied in DA projects such as Celestia and Avail. In this section, we give a quick introduction to DAS and then explore the implementation and innovations of EigenDA.

- DAS

As a preliminary step toward Danksharding, EIP-4844 introduces “blob-carrying transactions”, which carry additional data blobs of roughly 125 KB each. In the context of data sharding and scalability, this additional data undoubtedly increases the burden on nodes. So, is there a way for nodes to download only a small portion of the data and still verify that all of it is available?

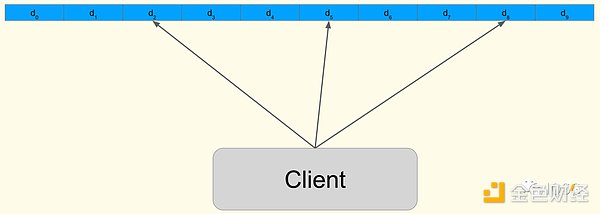

DAS addresses this by having nodes perform multiple random samplings on a small portion of data. Each successful sampling increases the node’s confidence in the availability of the data. Once it reaches a certain predefined level, the data is considered available. However, attackers can still hide a small portion of the data, so some form of fault tolerance mechanism is needed.

DAS utilizes erasure coding, where the data is divided into multiple blocks and encoded to generate additional redundant blocks. These redundant blocks contain partial information of the original data blocks, allowing for the recovery of lost data blocks when some blocks are lost or damaged. Thus, erasure coding provides redundancy and reliability for DAS.
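To make the recovery property concrete, here is a minimal sketch of a Reed-Solomon-style erasure code over a tiny prime field. The modulus `P`, the function names, and the sample data are illustrative assumptions, not EigenDA or Danksharding parameters. The original symbols are treated as evaluations of a polynomial, and the redundant symbols are further evaluations of the same polynomial.

```python
# Toy erasure code: data symbols are evaluations of a polynomial mod P.
# P and all names here are hypothetical, chosen only for illustration.
P = 257  # small prime; every symbol is an integer in [0, P)


def lagrange_eval(points, x):
    """Evaluate at x the unique polynomial passing through `points` (mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, -1, P) is the modular inverse (Python 3.8+)
        total = (total + yi * num * pow(den, -1, P)) % P
    return total


def encode(data, n):
    """Treat data[i] as p(i) and extend it to n evaluations p(0), ..., p(n-1)."""
    base = list(enumerate(data))
    return [lagrange_eval(base, x) for x in range(n)]


def recover(available, k):
    """Rebuild the k original symbols from any k surviving (index, value) pairs."""
    pts = available[:k]
    return [lagrange_eval(pts, x) for x in range(k)]


data = [72, 105, 33, 7]                            # 4 original symbols
coded = encode(data, 8)                            # 4 original + 4 redundant
survivors = [(i, coded[i]) for i in (1, 4, 6, 7)]  # half of the blocks are lost
assert recover(survivors, 4) == data
```

With a 2x extension like this one, any half of the coded symbols is enough to rebuild the data, so an attacker has to withhold more than half of them to make the data unrecoverable.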

In addition, it is necessary to verify whether the obtained redundant blocks are correctly encoded, as using incorrect redundant blocks would prevent the reconstruction of the original data. Danksharding utilizes KZG (Kate-Zaverucha-Goldberg) commitments for this purpose. KZG commitments are a method for verifying polynomials, proving that the values of a polynomial at specific positions match specified values.

The prover interprets the data as evaluations of a polynomial p(x), so that every data block, original or redundant, lies on the same polynomial, and publishes a commitment C to p(x) together with the data blocks. To verify the encoding, the verifier randomly samples t points x1, x2, …, xt and asks the prover to open the commitment at these points, that is, to provide p(x1), p(x2), …, p(xt), each with a short proof that the value is consistent with C. Because all blocks lie on the same polynomial, any sufficiently large set of verified points determines p(x) through Lagrange interpolation, and the original data can be reconstructed from it.
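For reference, the check behind a single KZG opening can be written as follows. This is the standard formulation rather than anything specific to this article: the trusted-setup secret τ, the group encodings [·]₁ and [·]₂, and the pairing e are notation introduced here. To show that p(z) = y, the prover sends a proof π built from the quotient polynomial q(x):

$$
C = [p(\tau)]_1, \qquad q(x) = \frac{p(x) - y}{x - z}, \qquad \pi = [q(\tau)]_1
$$

$$
e\big(\pi,\ [\tau]_2 - [z]_2\big) = e\big(C - [y]_1,\ [1]_2\big)
$$

The quotient q(x) is a polynomial only if p(z) = y, which is why a valid π convinces the verifier without revealing the rest of p(x).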

In short, using KZG commitments, validators only need a small number of points to verify the correctness of the entire encoding. In this way, we obtain a complete DAS.
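The growth of a sampling node's confidence can be sketched with a short calculation. It assumes a 2x erasure-coded extension (so an attacker must withhold at least half of the blocks to prevent reconstruction) and independent, uniformly random queries. Both assumptions, and the function names, are illustrative rather than taken from any specification.

```python
import math


def das_confidence(samples: int, min_hidden_fraction: float = 0.5) -> float:
    """Lower bound on confidence after `samples` successful random queries.

    If the data were unrecoverable, at least `min_hidden_fraction` of the
    blocks would be missing, so each query would succeed with probability
    at most 1 - min_hidden_fraction.
    """
    return 1.0 - (1.0 - min_hidden_fraction) ** samples


def samples_needed(target: float, min_hidden_fraction: float = 0.5) -> int:
    """Smallest number of successful samples that reaches `target` confidence."""
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - min_hidden_fraction))


for k in (1, 5, 10, 20):
    print(k, das_confidence(k))
```

Under these assumptions, the chance of being fooled halves with every successful sample, which is why a few dozen small queries can stand in for downloading the full data.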

- How

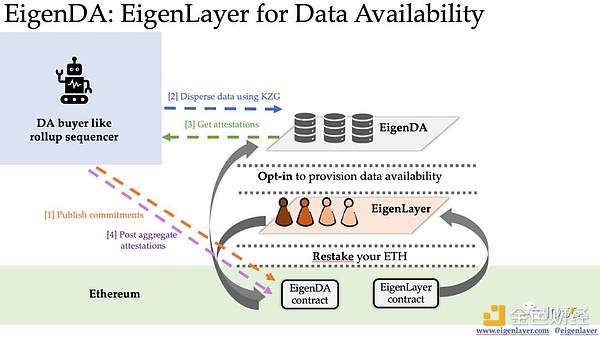

EigenLayer draws on the ideas of DAS and applies them to EigenDA.

1. First, the nodes of EigenDA re-stake and register in the EigenLayer contract.

2. Next, after the sequencer obtains the data, it divides the data into multiple blocks, generates redundant blocks using erasure codes, and calculates the KZG commitment for each data block. The sequencer publishes the KZG commitments one by one to the EigenDA contract as witnesses.

3. Then, the sequencer distributes the data blocks together with their KZG commitments to each EigenDA node. After receiving the KZG commitment, the node compares it with the KZG commitment on the EigenDA contract, stores the data block after confirming its correctness, and signs it.

4. The sequencer then collects these signatures, generates an aggregate signature, and publishes it to the EigenDA contract for verification. After the signature verification is successful, the entire process is completed.
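The four steps above can be sketched as a small simulation. It is a simplification under loud assumptions: a SHA-256 digest stands in for the KZG commitment, an HMAC stands in for each node's signature (so the toy contract holds the node keys, which a real contract would never do), the "aggregate" is just a collection of signatures, and erasure coding is omitted. The names `Node`, `DAContract`, and `disperse` are hypothetical.

```python
import hashlib
import hmac


def commit(chunk: bytes) -> str:
    # Stand-in for a KZG commitment: a plain SHA-256 digest (assumption).
    return hashlib.sha256(chunk).hexdigest()


def sign(key: bytes, message: str) -> str:
    # Stand-in for a node signature: HMAC-SHA256 (assumption).
    return hmac.new(key, message.encode(), hashlib.sha256).hexdigest()


class Node:
    def __init__(self, name: str, key: bytes):
        self.name, self.key, self.store = name, key, {}

    def receive(self, index: int, chunk: bytes, onchain_commitment: str):
        # Step 3: check the chunk against the on-chain commitment, store, sign.
        if commit(chunk) != onchain_commitment:
            return None
        self.store[index] = chunk
        return sign(self.key, onchain_commitment)


class DAContract:
    def __init__(self, node_keys: dict, quorum: int):
        # Step 1: registered nodes (toy model: keyed by name).
        self.node_keys, self.quorum, self.commitments = node_keys, quorum, []

    def post_commitments(self, commitments):
        # Step 2: the sequencer publishes one commitment per chunk.
        self.commitments = list(commitments)

    def verify(self, signatures: dict) -> bool:
        # Step 4: count valid signatures and compare against the quorum.
        valid = 0
        for index, (name, sig) in signatures.items():
            expected = sign(self.node_keys[name], self.commitments[index])
            valid += hmac.compare_digest(sig, expected)
        return valid >= self.quorum


def disperse(blob: bytes, nodes, contract, chunk_size: int = 4) -> bool:
    chunks = [blob[i:i + chunk_size] for i in range(0, len(blob), chunk_size)]
    contract.post_commitments([commit(c) for c in chunks])
    signatures = {}
    for index, (chunk, node) in enumerate(zip(chunks, nodes)):
        sig = node.receive(index, chunk, contract.commitments[index])
        if sig is not None:
            signatures[index] = (node.name, sig)
    return contract.verify(signatures)


keys = {f"node{i}": bytes([i]) * 16 for i in range(4)}
nodes = [Node(name, key) for name, key in keys.items()]
print(disperse(b"0123456789abcdef", nodes, DAContract(keys, quorum=3)))
```

The point of the sketch is the division of labor: the sequencer does the splitting and committing, the nodes only check and sign, and the contract only verifies commitments and signatures.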

In the above process, since EigenDA nodes only claim to have stored the data blocks through signatures, we need a way to ensure that EigenDA nodes are not lying. EigenDA adopts Proof of Custody.

The idea of Proof of Custody is to place a “bomb” in the data: a node that signs data containing a bomb is slashed. To implement Proof of Custody, two things need to be designed: a secret value that distinguishes DA nodes from one another and prevents cheating, and a function specific to each DA node that takes the DA data and the secret value as input and outputs whether there is a bomb. A node that does not store the complete data it is responsible for cannot compute this function. Dankrad has shared more details about Proof of Custody on his blog.

If there are lazy nodes, anyone can submit a proof to the EigenDA contract, and the contract will verify it. If the verification passes, the lazy nodes are slashed.
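A minimal sketch of the custody idea, assuming a hash-based bomb function: the low bit of SHA-256 over the node's secret and the data decides whether the data contains a bomb. This is not Dankrad's actual construction or EigenDA's implementation, and the function names are hypothetical. It also assumes the secret is revealed later so that anyone can recompute the bit.

```python
import hashlib


def custody_bit(secret: bytes, data: bytes) -> int:
    """Return 1 if `data` contains a bomb for the node holding `secret`.

    The hash covers the whole data, so a node that has not stored the
    full data cannot compute the bit.
    """
    return hashlib.sha256(secret + data).digest()[0] & 1


def honest_sign(secret: bytes, data: bytes) -> bool:
    """An honest node signs only when there is no bomb."""
    return custody_bit(secret, data) == 0


def is_slashable(revealed_secret: bytes, data: bytes, node_signed: bool) -> bool:
    """Anyone can run this check once the node's secret has been revealed."""
    return node_signed and custody_bit(revealed_secret, data) == 1


secret = b"node-1-secret"
blobs = [bytes([i]) * 8 for i in range(64)]
# A lazy node signs everything without looking at the data.
lazy_hits = sum(is_slashable(secret, blob, True) for blob in blobs)
# An honest node computes the bit first and never signs a bomb.
honest_hits = sum(is_slashable(secret, blob, honest_sign(secret, blob)) for blob in blobs)
print(lazy_hits, honest_hits)
```

In this toy version a lazy node that signs blindly hits a bomb on roughly half of the blobs, while an honest node that evaluates the function is never slashable.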

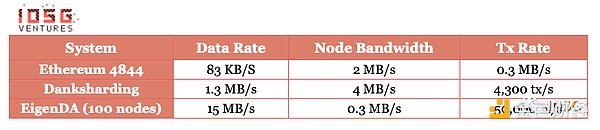

In terms of hardware requirements, computing KZG commitments for 32 MB of data within 1 second requires a CPU with approximately 32 to 64 cores, but this requirement applies only to the sequencer and places no burden on EigenDA nodes. On the EigenDA testnet, 100 EigenDA nodes reach a throughput of 15 MB/s, while each node needs only 0.3 MB/s of download bandwidth (far below the requirements for running an Ethereum validator).

In summary, EigenDA decouples data availability from consensus, so the propagation of data blocks is no longer limited by the bottleneck of the consensus protocol or by low P2P network throughput. In effect, EigenDA hitches a ride on Ethereum’s consensus: the sequencer’s publication of KZG commitments and aggregate signatures, the smart contract’s signature verification, and the slashing of malicious nodes all happen on Ethereum, which provides the consensus guarantee. Therefore, there is no need to bootstrap a new trust network.

- Problems of DAS

DAS currently has some limitations as a technology. We must assume that malicious adversaries will use every available means to deceive light nodes into accepting false data. Sreeram outlined the following in a tweet.

In order for individual nodes to have a high enough probability of considering the data to be available, the following requirements need to be met:

- Random sampling: Each node independently and randomly selects the set of samples it queries, and the adversary does not know which samples were requested by whom. This way, the adversary cannot adapt its strategy to deceive particular nodes.

- Concurrent sampling: DAS needs to be conducted by multiple nodes simultaneously, so that the attacker cannot distinguish the sampling of one node from that of other nodes.

- Private IP sampling: Each queried data block is requested from an anonymous IP. Otherwise, the adversary can identify the individual nodes doing the sampling and selectively serve each node only the parts of the data that it queries, while withholding the rest.

Having multiple light nodes sample randomly satisfies the concurrency and randomness requirements, but there is currently no good way to achieve private IP sampling. Attack vectors against DAS therefore remain, and DAS currently provides only weak guarantees. These issues are actively being addressed.

EigenLayer & MEV

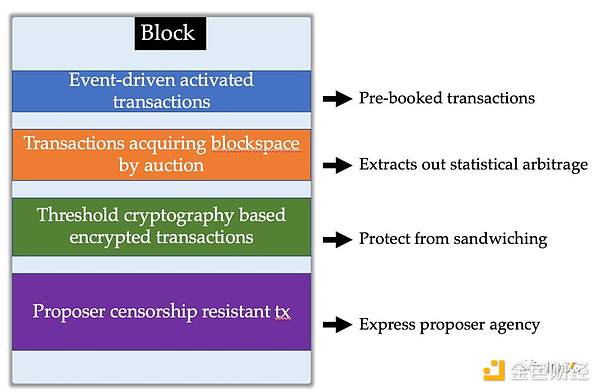

Sreeram discussed the application of EigenLayer in the MEV stack at the MEVconomics Summit. Based on the cryptoeconomic primitives of staking and slashing, proposers can offer the following four features. These correspond to the third use case mentioned above, validator commitments.

Event-driven Activation

Protocols like Gelato can react to specific on-chain events. They continuously listen for on-chain events and trigger predefined actions once an event occurs. These tasks are typically completed by third-party listeners/executors.

The reason they are called “third-party” is that there is no direct connection between the listener/executor and the proposer who actually processes the block space. Suppose a listener/executor triggers a transaction but it is not included in a block by the proposer for some reason. This cannot be attributed, thus no economic guarantee can be provided with certainty.

If this service is provided by proposers who participate in re-staking, they can make credible commitments to trigger the actions, and if the resulting transactions are ultimately not included in a block, the proposers are slashed. This provides stronger guarantees than third-party listeners/executors can.

In practical applications (such as lending protocols), one purpose of setting a higher collateralization ratio is to cover price fluctuations over a certain time period. This period is the time window before liquidation, and a higher collateralization ratio means a longer buffer. If a majority of transactions adopt an event-driven reaction strategy backed by the strong guarantee from proposers, then (for highly liquid assets) the price movement that the collateral must cover can be limited to a few block intervals, which allows a lower collateralization ratio and better capital efficiency.
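A rough calculation illustrates why a shorter liquidation window allows a lower collateralization ratio. It rests on a modeling assumption that is not from the article: price volatility scales with the square root of time. The 80% annual volatility, the 3-sigma safety margin, and the 12-second block interval used in the example are hypothetical inputs.

```python
import math

SECONDS_PER_YEAR = 365 * 24 * 3600


def price_buffer(annual_vol: float, window_seconds: float, sigmas: float = 3.0) -> float:
    """Adverse price move to cover, as a fraction of the price.

    Assumes volatility scales with the square root of elapsed time.
    """
    return sigmas * annual_vol * math.sqrt(window_seconds / SECONDS_PER_YEAR)


one_hour = price_buffer(0.8, 3600)        # liquidation may take up to an hour
three_blocks = price_buffer(0.8, 3 * 12)  # liquidation guaranteed within 3 blocks
print(f"1 hour: {one_hour:.4%}, 3 blocks: {three_blocks:.4%}")
```

Under this model, shrinking the window from one hour to three blocks (a factor of 100 in time) shrinks the required buffer by a factor of ten.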

Partial Block Auction

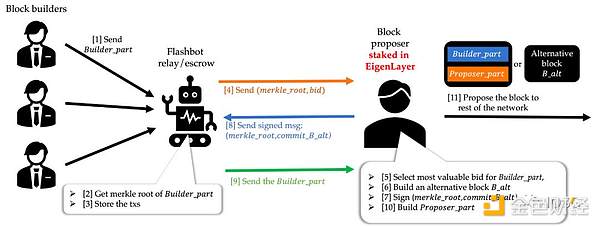

In the current design of MEV-Boost, proposers completely outsource block space to builders and can only passively receive and propose the entire block submitted by a builder. Compared with the widely distributed set of proposers, builders are few in number, and they may collude to censor or extort specific transactions, because under MEV-Boost proposers cannot include the transactions they want.

EigenLayer proposed an upgrade to MEV-Boost called MEV-Boost++, which introduces a Proposer-Part in the block, allowing proposers to include any transactions in the Proposer-Part. Proposers can also construct an alternative block B-alt in parallel and propose B-alt if the relay does not release the Builder_Part. This flexibility both ensures censorship resistance and solves the relay liveness problem.
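The proposer's decision under MEV-Boost++ can be sketched as follows. The `Block` type and the `choose_block` function are hypothetical names for illustration; the actual proposal specifies more, such as commitments, deadlines, and slashing conditions, which are left out here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Block:
    builder_part: List[str] = field(default_factory=list)
    proposer_part: List[str] = field(default_factory=list)


def choose_block(
    builder_part: Optional[List[str]],
    proposer_part: List[str],
    b_alt: Block,
) -> Block:
    """Pick the block to propose.

    `builder_part` is None when the relay did not release it in time.
    """
    if builder_part is None:
        # Relay liveness failure: fall back to the self-built block B-alt.
        return b_alt
    # Normal case: the builder's part plus transactions the proposer chose.
    return Block(builder_part=builder_part, proposer_part=proposer_part)


b_alt = Block(proposer_part=["tx_censored", "tx_local"])
print(choose_block(["tx_mev_1", "tx_mev_2"], ["tx_censored"], b_alt))
print(choose_block(None, ["tx_censored"], b_alt))
```

In both branches the transaction the proposer wants is included, which is the censorship-resistance property, and the slot is never lost to an unresponsive relay.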

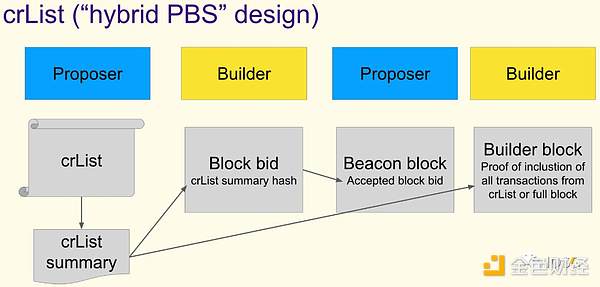

This is consistent with the purpose of crList, the protocol-layer design proposed alongside ePBS, which aims to ensure that a wide set of proposers can take part in determining the composition of blocks and thereby achieve censorship resistance.

Threshold Encryption

In the MEV solution based on threshold encryption, a group of distributed nodes manage encryption and decryption keys. Users encrypt transactions, which are decrypted and executed only after being included in the block.

However, threshold encryption relies on the assumption of majority honesty. If a majority of nodes behave maliciously, it may result in decrypted transactions not being included in the block. Proposers engaging in re-staking can make credible commitments to encrypted transactions to ensure their inclusion in the block. If a proposer does not include decrypted transactions, they will be penalized. Of course, if a malicious majority of nodes does not release decryption keys, the proposer can propose an empty block.

Long-term Blockspace Auction

A long-term blockspace auction allows buyers of block space to pre-book a validator’s future block space. Validators participating in re-staking can make credible commitments, and if the buyer’s transactions are not included by the expiration, the validator is slashed. This guaranteed access to block space has some practical use cases. For example, oracle price feeds need to be updated at regular intervals; Arbitrum publishes L2 data to Ethereum L1 every 1-3 minutes, and Optimism does so every 30 seconds to 1 minute.
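The slashing condition for such a commitment can be sketched as a simple check. The `BlockspaceCommitment` type, its fields, and the slot-indexed view of the chain are assumptions made for illustration and do not come from any EigenLayer contract.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class BlockspaceCommitment:
    validator: str
    buyer_tx: str
    expiry_slot: int


def should_slash(
    commitment: BlockspaceCommitment,
    blocks_by_slot: Dict[int, Set[str]],
    current_slot: int,
) -> bool:
    """Return True if the commitment has expired without the buyer's tx on chain."""
    if current_slot <= commitment.expiry_slot:
        return False  # the validator still has time to include the transaction
    included = any(
        commitment.buyer_tx in txs
        for slot, txs in blocks_by_slot.items()
        if slot <= commitment.expiry_slot
    )
    return not included


c = BlockspaceCommitment(validator="val_7", buyer_tx="oracle_update_42", expiry_slot=100)
chain = {98: {"tx_a"}, 99: {"tx_b"}, 100: {"tx_c"}}
print(should_slash(c, chain, current_slot=101))
```

Because the condition depends only on on-chain data (which transactions landed in which slots), it is objective and attributable.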

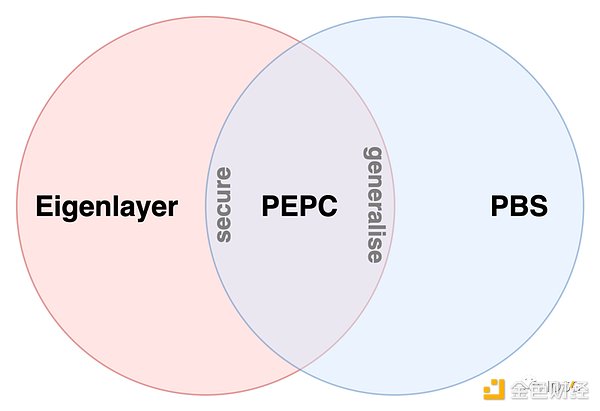

PEPC

Let’s turn to PEPC (Protocol-enforced Proposer Commitment), which has recently been widely discussed in the Ethereum community. PEPC is essentially a generalization of ePBS.

Let’s break down this logical chain step by step.

- First, take MEV-Boost, the off-protocol PBS, as an example. MEV-Boost currently relies on Ethereum’s protocol-level slashing mechanism: a proposer who signs two different block headers at the same block height is slashed. Because the proposer must sign the block header submitted by the relay, the header and the proposer are bound together, which gives the relay reason to believe that the builder’s block will be proposed. Otherwise, the proposer must either give up the slot or propose a different block (and be slashed). The proposer’s commitment is thus backed by the economic security of staking and slashing.

- Similarly, a key principle in designing ePBS is “honest builder publication safety,” which ensures that blocks published by honest builders will be proposed. ePBS, as an in-protocol PBS, will be incorporated into Ethereum’s consensus layer, so the guarantee comes from the protocol itself.

- PEPC is a further generalization of ePBS. ePBS promises that “the builder’s block will be proposed.” If we extend this promise to partial block auctions, parallel block auctions, future block auctions, and so on, proposers can do more, and the protocol layer ensures that these actions are executed correctly.

There is a subtle relationship between PEPC and EigenLayer. It is not difficult to find that there are some similarities between the use cases of PEPC and the block producer use case of EigenLayer. However, an important difference between EigenLayer and PEPC is that proposers participating in re-staking can theoretically violate their commitments, although they will be economically punished for doing so, while PEPC focuses on “Protocol-enforced”, which means that it enforces commitment at the protocol layer, and if the commitment cannot be executed, the block is invalid.

(PS: Roughly speaking, EigenDA is similar to Danksharding, and MEV-Boost++ is similar to ePBS. These two services are like opt-in versions of protocol-layer designs: they can reach the market faster than changes to the protocol layer, they track what Ethereum plans to do in the future, and they maintain Ethereum alignment through re-staking.)

Don’t Overload Ethereum Consensus?

Several months ago, Vitalik’s article “Don’t Overload Ethereum Consensus” was widely regarded as criticism of Restaking. I believe that it is just a reminder or warning to maintain social consensus, with the emphasis on social consensus rather than denying re-staking.

In the early days of Ethereum, The DAO attack caused a huge controversy, and the community had intense discussions on whether to perform a hard fork. Today, the Ethereum ecosystem, including rollups, carries a large number of applications. It is therefore very important to avoid causing significant divisions within the community and to keep social consensus intact.

Hermione creates a successful layer 2 and argues that because her layer 2 is the largest, it is inherently the most secure, because if there is a bug that causes funds to be stolen, the losses will be so large that the community will have no choice but to fork to recover the users’ funds. High-risk.

The above quote from the original article is a good example. Today, the total TVL of L2 exceeds tens of billions of dollars. If there is a problem, it will have a huge impact. If the community proposes a hard fork to roll back the state, it will inevitably cause a huge controversy. Suppose you and I have a considerable amount of funds on it, how would you choose – retrieve the money or respect the immutability of the blockchain? Vitalik’s point is that projects built on Ethereum should properly manage risks and should not try to influence Ethereum’s social consensus by binding the life or death of the project to Ethereum.

Returning to EigenLayer, the key to risk management is that each AVS needs to define slashing rules that are objective, traceable on-chain, and attributable, so as to avoid disputes. Examples include double-signing blocks on Ethereum, signing invalid blocks of another chain in a cross-chain bridge based on light clients, and the EigenDA Proof of Custody discussed earlier. These are all examples of clear slashing rules.

Conclusion

EigenLayer is expected to launch its mainnet and release its flagship product EigenDA early next year. Many infrastructure projects have announced collaborations with EigenLayer. In this article, we discussed EigenDA, MEV, and PEPC, and there are still many interesting discussions going on around other use cases. Staking is becoming a mainstream narrative in the market. We will continue to follow the progress of EigenLayer and share any insights!
