Author | Mohamed Fouda, AllianceDAO

Translation | Cecilia, bfrenz DAO

Since the launch of ChatGPT and GPT-4, there has been a constant stream of content about how AI is changing everything, including Web3. Developers across industries have reported productivity gains ranging from 50% to 500% from using ChatGPT. As a developer's assistant, it can generate boilerplate code, write unit tests, create documentation, debug, and detect vulnerabilities.

This article explores how AI enables new and interesting use cases in Web3. More importantly, it explains the mutually beneficial relationship between Web3 and AI: few technologies can significantly influence the trajectory of AI development, and Web3 is one of them.

Specific Scenarios of AI Empowering Web3

1. Blockchain Games

Generating bots for non-programmer gamers

Blockchain-based games like Dark Forest have created a unique style of gameplay in which players gain an advantage by developing and deploying “bots” that perform game tasks. However, this gameplay can exclude players who are not proficient in programming. Large language models (LLMs) could change this: an LLM can learn the rules of a blockchain game and then generate “bots” that implement a player's strategy, so players no longer need to write the code themselves. For example, projects like Primodium and AI Arena are attempting to let human and AI players participate in games together without complex coding.

Battling or betting with bots

Another possibility in blockchain games is fully autonomous AI players. In this case, the player itself is an AI agent, such as AutoGPT, which uses an LLM as its backend and has access to the internet and, possibly, initial cryptocurrency funds. These AI players can compete in games resembling bot battles, which creates a market where players speculate and bet on the outcomes. Such a market could give rise to a new gaming experience that is both strategic and appealing to a wider range of players, regardless of their programming skills.
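The article does not specify how such a betting market would work. One common design is a parimutuel pool, where everyone who backed the winning agent splits the pool in proportion to their stake. The sketch below assumes that design; the `(bettor, agent_backed, amount)` format, the refund policy, and the operator `fee` are hypothetical choices.

```python
from fractions import Fraction

def parimutuel_payouts(bets, winner, fee=Fraction(0)):
    """Split a betting pool among those who backed the winning AI agent.

    bets   -- list of (bettor, agent_backed, amount) tuples (hypothetical format)
    winner -- the agent that won the match
    fee    -- share of the pool kept by the market operator (assumed parameter)
    """
    pool = sum(amount for _, _, amount in bets)
    winning_stake = sum(amount for _, agent, amount in bets if agent == winner)
    payouts = {}
    if winning_stake == 0:
        # Nobody backed the winner: refund every stake (one possible policy).
        for bettor, _, amount in bets:
            payouts[bettor] = payouts.get(bettor, 0) + Fraction(amount)
        return payouts
    distributable = Fraction(pool) * (1 - fee)
    for bettor, agent, amount in bets:
        if agent == winner:
            # Each winner receives a share proportional to their stake.
            share = distributable * amount / winning_stake
            payouts[bettor] = payouts.get(bettor, 0) + share
    return payouts

bets = [("alice", "bot_a", 30), ("bob", "bot_b", 50), ("carol", "bot_a", 20)]
print(parimutuel_payouts(bets, "bot_a"))
```

Here alice and carol split the 100-unit pool 60/40; with `fee=Fraction(1, 20)` they would receive 57 and 38. `Fraction` keeps the arithmetic exact, which matters when payouts are settled in integer token units.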

Creating realistic NPC environments for online games

Currently, NPCs in many games are relatively simplistic, with limited actions and minimal impact on game progress. Combining AI and Web3 makes it possible to create more immersive NPC environments that break predictability and make games more interesting. A key prerequisite is more engaging AI-controlled NPCs.

However, realistic NPC environments pose a challenge: how to introduce meaningful NPC dynamics while minimizing the throughput (transactions per second, TPS) those activities consume. If NPC activity requires high TPS, it can congest the network and degrade the experience of real players.
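One way to keep NPC activity from consuming network throughput, assuming the NPC logic runs off-chain (for example on a game server or rollup), is to batch each block's NPC actions and post only a hash commitment on-chain. The sketch below compares the resulting load; the action format, the numbers, and the batching design are hypothetical, and it ignores how players would obtain the batched data to check the commitment.

```python
import hashlib
import json

def naive_tps(num_npcs: int, actions_per_npc_per_sec: float) -> float:
    # Every NPC action is submitted as its own on-chain transaction.
    return num_npcs * actions_per_npc_per_sec

def batch_commitment(actions: list) -> str:
    # Fold all NPC actions from one block interval into a single SHA-256 digest.
    # Only this digest would be posted on-chain; the actions stay off-chain.
    encoded = json.dumps(actions, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()

def batched_tps(block_time_sec: float) -> float:
    # One commitment transaction per block, independent of how many NPCs acted.
    return 1 / block_time_sec

tick_actions = [
    {"npc": 1, "action": "move", "to": [3, 4]},
    {"npc": 2, "action": "trade", "item": "ore", "qty": 5},
]
print(naive_tps(1_000, 2))      # 1,000 NPCs acting twice per second
print(batched_tps(2.0))         # one commitment per 2-second block
print(batch_commitment(tick_actions))
```

With these assumed numbers, per-action transactions would need 2,000 TPS, while batching needs 0.5 TPS. The trade-off is that the chain no longer sees individual NPC actions, only a commitment to them.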

Through these new forms of gameplay, blockchain games are evolving in a more diverse and inclusive direction, allowing more types of players to participate and enjoy the game together.

2. Decentralized Social Media

Today, decentralized social (DeSo) platforms struggle to offer a user experience that sets them apart from existing centralized platforms. Seamless integration with AI can give these Web2 alternatives a distinctive experience. For example, AI-managed accounts can attract new users to the network by sharing relevant content, commenting on posts, and participating in discussions. AI accounts can also aggregate news, summarizing the latest trends that match each user's interests. Such AI integration can bring more innovation to decentralized social media platforms and attract more users.

3. Security and Economic Design Testing of Decentralized Protocols

LLM-based AI agents make it possible to run practical tests of the security and economic robustness of decentralized networks. Such an agent can define goals, write code, and execute that code, offering a new way to evaluate the security and economic design of decentralized protocols. In this process, the agent is directed to exploit the protocol's security and economic balance. It can first examine the protocol documentation and smart contracts to identify potential weaknesses, then independently attempt attacks on the protocol's mechanisms to maximize its own gains. This approach simulates the real-world environment the protocol may face after launch. With these test results, protocol designers can review the design and patch weaknesses. Currently, only specialized firms like Gauntlet have the technical skills to provide such services for decentralized protocols. However, by training LLMs on Solidity, DeFi mechanisms, and past exploits, we expect AI agents to provide similar functionality.
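The agent's "maximize its own gains" loop can be illustrated on a toy model. The sketch below is our own illustration rather than anything from the article: it brute-forces a sandwich attack against a Uniswap V2-style constant-product pool with a 0.3% fee. A real agent would derive candidate attacks from the protocol's documents and contracts; here the pool sizes, the victim trade, and the 5% slippage tolerance are hypothetical.

```python
def swap_out(reserve_in: float, reserve_out: float, amount_in: float, fee: float = 0.003) -> float:
    # Constant-product (x * y = k) output amount after the LP fee.
    effective_in = amount_in * (1 - fee)
    return reserve_out * effective_in / (reserve_in + effective_in)

def sandwich_profit(x, y, victim_in, victim_min_out, attack_in, fee=0.003):
    """Attacker profit (in token X) from front- and back-running a victim's X->Y swap.
    Returns None when the victim's slippage limit would make its swap revert."""
    # 1. Front-run: attacker sells attack_in X for Y, moving the price against the victim.
    attacker_y = swap_out(x, y, attack_in, fee)
    x, y = x + attack_in, y - attacker_y
    # 2. Victim swap executes at the worse price, unless it breaches min_out.
    victim_out = swap_out(x, y, victim_in, fee)
    if victim_out < victim_min_out:
        return None
    x, y = x + victim_in, y - victim_out
    # 3. Back-run: attacker sells its Y back for X.
    attacker_x = swap_out(y, x, attacker_y, fee)
    return attacker_x - attack_in

def best_attack(x, y, victim_in, victim_min_out, candidates, fee=0.003):
    # Brute-force search standing in for an agent's strategy exploration.
    best_size, best_profit = 0.0, 0.0
    for size in candidates:
        profit = sandwich_profit(x, y, victim_in, victim_min_out, size, fee)
        if profit is not None and profit > best_profit:
            best_size, best_profit = size, profit
    return best_size, best_profit

pool_x, pool_y, victim_in = 1_000.0, 1_000.0, 100.0
min_out = swap_out(pool_x, pool_y, victim_in) * 0.95  # victim tolerates 5% slippage
size, profit = best_attack(pool_x, pool_y, victim_in, min_out, [float(a) for a in range(0, 201, 5)])
print(f"front-run with {size} X for about {profit:.2f} X profit")
```

A designer could rerun the search with a tighter slippage bound or a different fee to see how much extractable value remains, which is the kind of finding such tests feed back into the protocol design.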

4. LLM for Data Indexing and Metric Extraction

Although blockchain data is public, indexing it and extracting useful insights has always been a challenge. Some players, such as CoinMetrics, specialize in indexing data and building complex metrics for sale, while others, such as Dune, index the main components of raw transactions and extract some metrics through community contributions. Recent advances in LLMs suggest that data indexing and metric extraction could be revolutionized. Dune has recognized this potential threat and announced an LLM roadmap that includes SQL query explanations and natural-language (NLP-based) queries. However, we expect the impact of LLMs to be more profound. One possibility is LLM-based indexing, in which an LLM interacts directly with blockchain nodes to index the data needed for specific metrics. Startups like Dune Ninja have already begun exploring innovative LLM-based data indexing applications.
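To make "NLP-based queries" concrete: the LLM's job is to turn a question into a query over indexed data. The sketch below shows only the output side, an SQL query that such a layer might produce for "How many unique addresses were active each day?", run against a tiny in-memory table. The `transfers` schema and rows are hypothetical and are not Dune's actual tables; the natural-language-to-SQL step itself is not shown.

```python
import sqlite3

# Hypothetical schema for decoded token transfers (not Dune's actual tables).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transfers (block_date TEXT, sender TEXT, receiver TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO transfers VALUES (?, ?, ?, ?)",
    [
        ("2023-05-01", "0xaaa", "0xbbb", 10.0),
        ("2023-05-01", "0xbbb", "0xccc", 5.0),
        ("2023-05-02", "0xaaa", "0xccc", 7.5),
    ],
)

# SQL that a natural-language layer might produce for the question
# "How many unique addresses were active each day?"
DAILY_ACTIVE_SQL = """
SELECT block_date, COUNT(DISTINCT address) AS active_addresses
FROM (
    SELECT block_date, sender AS address FROM transfers
    UNION ALL
    SELECT block_date, receiver AS address FROM transfers
) AS participants
GROUP BY block_date
ORDER BY block_date
"""

def daily_active_addresses(connection):
    return connection.execute(DAILY_ACTIVE_SQL).fetchall()

for day, count in daily_active_addresses(conn):
    print(day, count)
```

Counting both senders and receivers is a modeling decision the query makes explicit; an LLM-generated query would need the same kind of review before its metric is trusted.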

5. Developer Participation in New Ecosystem

Blockchain networks compete to attract developers to build applications, and Web3 developer activity is one of the key indicators of an ecosystem's success. However, developers often struggle to find support when they start learning and building in a new ecosystem. Ecosystems invest millions of dollars in dedicated DevRel teams to help developers explore them. Here, emerging LLMs have shown impressive results: they can explain complex code, catch errors, and even write documentation. Fine-tuned LLMs can complement human expertise and significantly improve development teams' productivity. For example, LLMs can create documentation and tutorials, answer frequently asked questions, or provide template code and unit tests for developers at hackathons. All of this helps drive active developer participation and the growth of the whole ecosystem.

6. Improving DeFi Protocols

Integrating AI into the logic of DeFi protocols could significantly improve the performance of many of them. Currently, a main obstacle to applying AI in DeFi is the high cost of running AI on-chain. AI models can be run off-chain, but until recently their execution could not be verified. Projects like Modulus and ChainML are making verifiable off-chain execution a reality: they allow machine learning models to run off-chain while limiting on-chain costs. In the case of Modulus, the on-chain cost is only for verifying the model's zero-knowledge proof (ZKP). In the case of ChainML, the on-chain cost is the oracle fee paid to a decentralized AI execution network.

Here are some DeFi use cases that could benefit from AI integration:

- AMM Liquidity Allocation: for example, updating Uniswap V3 liquidity ranges. With AI, a protocol can adjust liquidity ranges more intelligently, improving the efficiency and returns of the automated market maker (AMM).
- Liquidation Protection and Debt Positions: combining on-chain and off-chain data enables more effective liquidation protection strategies that shield debt positions from market swings.
- Complex DeFi Structured Products: vault mechanisms can rely on financial AI models instead of fixed strategies. These strategies may include AI-managed trading, lending, or options positions, making the products more adaptive and flexible.
- Advanced On-Chain Credit Scoring: by accounting for how a wallet behaves across different blockchains, AI can help build a more accurate and comprehensive credit scoring system that better assesses risks and opportunities.
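For the first item, here is a minimal sketch of what "adjusting the liquidity range" means mechanically. In Uniswap V3, price = 1.0001^tick, and a position's bounds must be ticks that are multiples of the pool's tick spacing (60 for the 0.3% fee tier). The sketch uses simple realized volatility as a stand-in for the AI model; the price window, horizon, width of two standard deviations, and the use of raw prices without token-decimal adjustment are all assumptions.

```python
import math
import statistics

TICK_BASE = 1.0001  # Uniswap V3 relation: price = 1.0001 ** tick

def price_to_tick(price: float) -> int:
    # Tick at or just below `price` (floating-point approximation).
    return math.floor(math.log(price) / math.log(TICK_BASE))

def align_down(tick: int, spacing: int) -> int:
    return (tick // spacing) * spacing  # floor division also rounds negatives down

def align_up(tick: int, spacing: int) -> int:
    return -((-tick) // spacing) * spacing

def realized_volatility(prices: list) -> float:
    # Standard deviation of per-step log returns; a stand-in for an AI forecast.
    log_returns = [math.log(b / a) for a, b in zip(prices, prices[1:])]
    return statistics.stdev(log_returns)

def suggest_range(prices, horizon_steps, width_sigmas=2.0, tick_spacing=60):
    """Return (lower_tick, upper_tick) spanning +/- width_sigmas of expected movement."""
    current = prices[-1]
    sigma = realized_volatility(prices) * math.sqrt(horizon_steps)
    lower_tick = align_down(price_to_tick(current * math.exp(-width_sigmas * sigma)), tick_spacing)
    upper_tick = align_up(price_to_tick(current * math.exp(width_sigmas * sigma)), tick_spacing)
    if upper_tick <= lower_tick:
        upper_tick = lower_tick + tick_spacing  # keep the range non-empty
    return lower_tick, upper_tick

prices = [100.0, 101.0, 99.0, 102.0, 100.5]
print(suggest_range(prices, horizon_steps=24))
```

An AI-driven strategy would replace `realized_volatility` with a learned forecast, and the verifiable off-chain execution described above is what would let a protocol act on that forecast without trusting the operator.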

By adopting these AI use cases, DeFi can better adapt to evolving market demands, improve efficiency, reduce risk, and create more value for users. As verifiable off-chain computation matures, the role of AI in DeFi should expand further.

Web3 Technology Can Help Improve the Capability of AI Models

Although existing AI models have demonstrated tremendous potential, they still face challenges around data privacy, the fair execution of specific models, and the creation and spread of fake content. Web3 technology's unique strengths may play an important role in these areas.

1. Creating Exclusive Datasets for ML Training

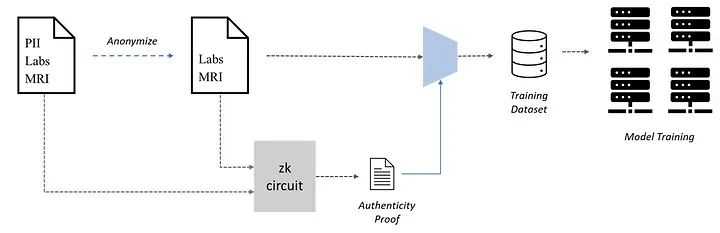

One area where Web3 can help AI is the collaborative creation of exclusive datasets for machine learning (ML) training, specifically through Proof of Private Work (PoPW) networks for dataset creation. Large datasets are crucial for accurate ML models, but acquiring or creating them can be a bottleneck, especially in use cases that require private data. For example, training ML models for medical diagnosis requires access to medical records, yet patients may be unwilling to share their records because of privacy concerns. To address this, patients can verifiably anonymize their medical records, protecting their privacy while still allowing the records to be used for ML training.

However, the authenticity of anonymized data may be a concern, since fake data can severely degrade model performance. Zero-knowledge proofs (ZKPs) can be used to verify the authenticity of anonymized data: patients can generate ZKPs proving that the anonymized records are faithful copies of their original records with personally identifiable information (PII) removed. This approach both protects privacy and ensures the data is credible.
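Producing an actual ZKP requires a proving system and is beyond a short example. The sketch below swaps in a simpler technique with a related goal: salted hash commitments with selective disclosure. An issuer (a hospital, a hypothetical role here) commits to every field of a record; the patient later reveals only the non-PII fields plus their salts, and a researcher checks them against the commitments. Unlike a ZKP, this cannot prove statements about hidden fields, and it assumes the issuer signs or anchors the commitments on-chain, which is not shown.

```python
import hashlib
import json
import secrets

def commit_field(name: str, value: str, salt: str) -> str:
    # JSON gives an unambiguous encoding; the random salt stops brute-forcing
    # low-entropy values such as birth dates from the public commitment.
    payload = json.dumps([name, value, salt]).encode()
    return hashlib.sha256(payload).hexdigest()

def issue_commitments(record: dict):
    """Run by the issuer: public commitments plus private salts for the patient."""
    salts = {name: secrets.token_hex(16) for name in record}
    commitments = {name: commit_field(name, value, salts[name]) for name, value in record.items()}
    return commitments, salts

def disclose(record: dict, salts: dict, pii_fields: set) -> dict:
    # The patient reveals only non-PII fields, each with its salt.
    return {name: (value, salts[name]) for name, value in record.items() if name not in pii_fields}

def verify_disclosure(commitments: dict, disclosure: dict) -> bool:
    # The researcher recomputes every commitment from the revealed value and salt.
    return all(
        commit_field(name, value, salt) == commitments.get(name)
        for name, (value, salt) in disclosure.items()
    )

record = {"name": "Jane Doe", "dob": "1980-01-01", "diagnosis": "type 2 diabetes", "hba1c": "7.2"}
commitments, salts = issue_commitments(record)
shared = disclose(record, salts, pii_fields={"name", "dob"})
print(sorted(shared), verify_disclosure(commitments, shared))
```

Any edit to a disclosed value, such as a fabricated lab result, no longer matches the issuer's commitment, which is the property that protects the dataset from fake data.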

2. Running Inference on Private Data

Currently, large language models (LLMs) have a significant problem handling private data. For example, when users interact with ChatGPT, OpenAI collects their data and may use it for model training, risking the leakage of sensitive information. Recent cases in which employees accidentally leaked confidential company data while using ChatGPT for work have highlighted this problem. Zero-knowledge (ZK) technology holds promise for addressing the issues that arise when ML models process private data. Here we consider two scenarios: open-source models and proprietary models.

For open-source models, users can download the model and run it locally on their private data. For example, Worldcoin’s “World ID” upgrade plan (“ZKML”) processes users' biometric data, such as iris scans, to create a unique identifier (IrisCode) for each user. In this case, users can download the ML model that generates the IrisCode and run it locally, keeping their biometric data private. By generating ZKPs, users can prove that they computed their IrisCode correctly, guaranteeing the integrity of the inference while protecting data privacy. Efficient ZK proving systems, such as those developed by Modulus Labs, are critical to making this kind of proof of ML inference practical.

When the ML model used for inference is proprietary, the situation is more complex, because local inference may not be an option. ZKPs can still help in two ways. The first is to anonymize user data with ZKPs before sending it to the ML model, as described above for dataset creation. The second is to preprocess the private data locally and send only the preprocessed output to the model. The preprocessing step hides the user's private data so that it cannot be reconstructed. Users can generate ZKPs proving that the preprocessing was executed correctly, while the proprietary remainder of the model runs remotely on the model owner's server. Example use cases include AI doctors that analyze patient medical records for potential diagnoses and algorithms that evaluate customers' private financial information for risk assessment.

With ZK technology, Web3 can provide greater data privacy protection, making AI more secure and reliable when dealing with private data, while also offering new possibilities for AI applications in privacy-sensitive areas.

3. Ensuring Content Authenticity and Combating Deepfake Scams

The rise of ChatGPT may have overshadowed generative AI models that produce images, audio, and video. These models can now generate realistic deepfakes. For example, AI-generated portrait photos and AI-generated songs imitating Drake have circulated widely on social media. Because people instinctively believe what they see and hear, such deepfakes pose real scam risks. While some startups try to address the problem with Web2 technologies, Web3 technologies such as digital signatures may offer a more effective solution.

In Web3, transactions are signed with the user's private key to prove their validity. Similarly, text, images, audio, and video can be digitally signed with the creator's private key to prove their authenticity. Anyone can verify the signature using the creator's public address, which can be published on the creator's website or social media accounts. Web3 networks already provide all the infrastructure that content verification needs. Some investors have linked their social media profiles, such as Twitter, or their accounts on decentralized platforms like Lens Protocol and Mirror, to cryptographic public addresses to make their content verifiable. For example, Fred Wilson, a partner at the prominent US venture firm USV, has discussed how associating content with public keys can help combat misinformation.
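The sign-and-verify flow above can be sketched in a few lines. Wallets actually use ECDSA over secp256k1 or EdDSA schemes such as Ed25519, which require a third-party library in Python, so this sketch swaps in a Lamport one-time signature built only on SHA-256. A Lamport key must sign only one message, and `public_fingerprint` is our hypothetical analogue of a public address that a creator would post on their profile.

```python
import hashlib
import secrets

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def keygen():
    # Private key: 256 pairs of random 32-byte secrets. Public key: their hashes.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk

def public_fingerprint(pk) -> str:
    # Short identifier a creator could publish on a website or social profile.
    return hashlib.sha256(b"".join(a + b for a, b in pk)).hexdigest()

def _digest_bits(message: bytes):
    digest = int.from_bytes(_h(message), "big")
    return [(digest >> (255 - i)) & 1 for i in range(256)]

def sign(sk, message: bytes):
    # Reveal one secret per bit of the content hash. One-time: never reuse sk.
    return [sk[i][bit] for i, bit in enumerate(_digest_bits(message))]

def verify(pk, message: bytes, signature) -> bool:
    if len(signature) != 256:
        return False
    return all(
        _h(revealed) == pk[i][bit]
        for i, (revealed, bit) in enumerate(zip(signature, _digest_bits(message)))
    )

sk, pk = keygen()
content = b"<bytes of an image, song, or video>"
sig = sign(sk, content)
print(public_fingerprint(pk)[:16], verify(pk, content, sig), verify(pk, content + b"!", sig))
```

A viewer who receives the content, the signature, and the full public key first checks that the key hashes to the published fingerprint, then runs `verify`; changing even one byte of the content changes the digest bits and the check fails.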

Although the concept is simple, much work remains to improve the user experience of authentication. For example, content signing needs to be automated so that it is seamless for creators. Another challenge is producing subsets of signed data, such as audio or video clips, without re-signing them. Many projects are working on these issues, and Web3 is well positioned to solve them. Through technologies like digital signatures, Web3 could play a crucial role in protecting content authenticity and combating deepfakes, increasing user trust and the credibility of the online environment.
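One possible approach to the clip problem is to sign once over the root of a Merkle tree built from fixed-size chunks of the content; anyone can then publish a run of chunks together with Merkle proofs, and viewers check each chunk against the signed root without the creator re-signing. The sketch below assumes clips are cut exactly on chunk boundaries and never re-encoded; transcoding or cropping would break verification, which is part of why this remains an open problem. The chunking and leaf encoding are illustrative choices, and the signature over the root is not shown.

```python
import hashlib

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def leaf_hash(index: int, chunk: bytes) -> bytes:
    # Domain-separated leaf that also binds the chunk to its position.
    return _h(b"\x00" + index.to_bytes(8, "big") + chunk)

def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)

def build_levels(chunks):
    if not chunks:
        raise ValueError("need at least one chunk")
    level = [leaf_hash(i, c) for i, c in enumerate(chunks)]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level  # duplicate last node on odd levels
        level = [node_hash(padded[i], padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels

def prove(levels, index):
    # Sibling hashes from leaf to root; these travel alongside each chunk of a clip.
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        proof.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return proof

def verify_chunk(root, index, chunk, proof):
    node = leaf_hash(index, chunk)
    for sibling in proof:
        node = node_hash(node, sibling) if index % 2 == 0 else node_hash(sibling, node)
        index //= 2
    return node == root

# Creator: split content into chunks and sign only the root.
chunks = [f"second-{i}".encode() for i in range(5)]
levels = build_levels(chunks)
root = levels[-1][0]

# Anyone can publish seconds 1-3 as a clip; viewers check each chunk against the signed root.
clip = [(i, chunks[i], prove(levels, i)) for i in range(1, 4)]
print(all(verify_chunk(root, i, c, p) for i, c, p in clip))
```

Binding each leaf to its index means a clip's chunks cannot be silently reordered, and each proof grows only logarithmically with the length of the original content.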

4. Minimizing Trust in Proprietary Models

Web3 technology can also minimize the trust users must place in proprietary machine learning (ML) models offered as a service. Users may want to verify that they received the service they paid for, or to obtain guarantees that the model is executed fairly, i.e., that the same model is used for every user. Zero-knowledge proofs (ZKPs) can provide these guarantees. In this architecture, the model's creator builds a ZK circuit representing the ML model. When needed, that circuit is used to generate a ZKP for each user's inference. The proofs can be sent to the user for verification or published to a public chain that handles verification. If the ML model is private, an independent third party can verify that the ZK circuit used actually represents the model. This trust-minimized approach is especially useful when the model's outputs carry high stakes. Some specific use cases:

Machine Learning Applications for Medical Diagnosis

Here, patients submit their medical data to an ML model for a potential diagnosis. Patients need assurance that the target ML model does not misuse their data. The inference process can generate a ZKP proving that the ML model was executed correctly.

Loan Credit Assessment

ZKPs can ensure that banks and financial institutions consider all the financial information applicants submit when evaluating creditworthiness. By proving that the same model is used for all applicants, ZKPs can also demonstrate fairness.

Insurance Claims Processing

Current insurance claims processing is manual and subjective. ML models could assess policies and claim details more fairly. Combined with ZKPs, such models can be proven to consider all policy and claim details, and to apply the same model to every claim under the same policy.

By leveraging technologies such as zero-knowledge proofs, Web3 is expected to provide innovative solutions to the trust problem of proprietary ML models. This not only helps improve user trust in model execution but also promotes fairer and more transparent transaction processes.

5. Solving the Centralization Problem of Model Creation

Creating and training large language models (LLMs) is a time-consuming and expensive process that requires specialized expertise, dedicated computing infrastructure, and millions of dollars in compute. These requirements can produce powerful centralized entities, such as OpenAI, that wield significant power over their users by controlling access to their models.

Given these centralization risks, there is important discussion about how Web3 can help decentralize the various aspects of model creation. Some Web3 advocates propose decentralized computing as a way to compete with centralized players, on the grounds that it can be a cheaper alternative. In our view, this may not be the best angle of competition. The drawback of decentralized computing is the communication overhead between heterogeneous computing devices, which can make ML training 10 to 100 times slower. For large-scale training jobs, the cost savings of distributing model creation across decentralized compute must therefore be weighed carefully against much slower training.
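A back-of-the-envelope calculation shows why communication dominates. Data-parallel training synchronizes gradients after every step, and with a ring all-reduce each node transmits about 2(N − 1)/N times the gradient size. The model size, precision, node count, and link speeds below are hypothetical, and the estimate ignores latency, compute overlap, and gradient compression, so it illustrates the mechanism rather than reproducing the 10 to 100 times figure.

```python
def ring_allreduce_bytes_per_node(num_params: float, bytes_per_param: int, num_nodes: int) -> float:
    # In a ring all-reduce, each node transmits 2 * (N - 1) / N times the gradient size.
    gradient_bytes = num_params * bytes_per_param
    return 2 * (num_nodes - 1) / num_nodes * gradient_bytes

def sync_seconds(num_params, bytes_per_param, num_nodes, link_gbps):
    # Bandwidth-only estimate: ignores latency, compute overlap, and compression.
    bytes_per_second = link_gbps * 1e9 / 8
    return ring_allreduce_bytes_per_node(num_params, bytes_per_param, num_nodes) / bytes_per_second

# Hypothetical: 7B-parameter model, fp16 gradients, 8 nodes.
datacenter = sync_seconds(7e9, 2, 8, link_gbps=100)  # data-center interconnect
internet = sync_seconds(7e9, 2, 8, link_gbps=1)      # consumer/WAN link
print(f"{datacenter:.2f} s vs {internet:.0f} s per gradient sync")
```

Under these assumptions each synchronization takes about 2 seconds on a 100 Gbps interconnect but about 200 seconds over 1 Gbps links, and that cost recurs on every training step.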

Another approach is to use Proof of Private Work (PoPW) to create unique, competitive ML models. Its advantage is that it decentralizes model creation by distributing datasets and computing tasks across different nodes in the network, and these nodes can contribute to model training while keeping their own data private. Projects such as Together and Bittensor are moving in this direction, attempting to decentralize model creation through PoPW networks.

Payment and Execution Rails for AI Agents

Payment and execution rails for AI agents have attracted a great deal of attention in recent weeks. Using LLMs to perform specific tasks and achieve goals is a growing trend, which began with the BabyAGI concept and quickly spread to more advanced versions such as AutoGPT. This leads to an important prediction: AI agents will become highly specialized in particular tasks. If a dedicated marketplace emerges, AI agents could search for, hire, and pay other AI agents, collaborating on larger projects.

Web3 networks provide an ideal environment for such AI agents. For payments in particular, AI agents can hold cryptocurrency wallets to receive payments from, and make payments to, other agents, enabling division of labor and collaboration. AI agents can also acquire resources permissionlessly by plugging directly into crypto networks. For example, an AI agent that needs to store data can create a Filecoin wallet and pay for decentralized storage on Filecoin, which builds on IPFS. AI agents can likewise rent computing resources from decentralized compute networks like Akash to perform specific tasks, and even to scale up their own execution.
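The search, hire, and pay loop described above can be sketched as follows. Everything here is hypothetical: `Agent` and `Marketplace` are illustrative names, the in-memory balances stand in for agent-controlled wallets and on-chain transfers, and a real deployment would also need escrow or proof of delivery, which the sketch omits.

```python
from dataclasses import dataclass, field

@dataclass
class Agent:
    name: str
    balance: int = 0  # integer token units, standing in for an on-chain wallet balance
    prices: dict = field(default_factory=dict)  # skill -> price per task

class Marketplace:
    """In-memory stand-in for an agent registry plus on-chain payments (hypothetical)."""

    def __init__(self):
        self.agents = {}
        self.ledger = []  # (payer, payee, amount, skill) records, like transfer logs

    def register(self, agent: Agent):
        self.agents[agent.name] = agent

    def hire(self, client_name: str, skill: str) -> str:
        client = self.agents[client_name]
        # Search: every other agent that offers the skill, cheapest first.
        offers = sorted(
            (a.prices[skill], a.name)
            for a in self.agents.values()
            if skill in a.prices and a.name != client_name
        )
        if not offers:
            raise LookupError(f"no agent offers {skill!r}")
        price, provider_name = offers[0]
        if client.balance < price:
            raise ValueError(f"{client_name} cannot afford {skill!r} at {price}")
        # Pay: debit the client's wallet and credit the provider's.
        client.balance -= price
        self.agents[provider_name].balance += price
        self.ledger.append((client_name, provider_name, price, skill))
        return provider_name

market = Marketplace()
market.register(Agent("planner", balance=100))
market.register(Agent("storage_a", prices={"store": 7}))
market.register(Agent("storage_b", prices={"store": 5}))
print(market.hire("planner", "store"), market.agents["planner"].balance)
```

Choosing the cheapest offer is the simplest possible hiring policy; an LLM-driven agent could instead weigh reputation or past delivery records before paying.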

Preventing AI Privacy Violations

However, privacy and data protection become especially important as this unfolds. Because training high-performance ML models requires large amounts of data, it is safe to assume that any public data will end up in ML models, which can then use it to predict individual behavior. In finance especially, ML models built on public data could violate users' financial privacy. Privacy-preserving technologies such as Zcash, Aztec payments, and private DeFi protocols like Penumbra and Aleo can help protect user privacy. These technologies allow transactions and data analysis while protecting user data, striking a balance between financial activity and the development of ML models.

Conclusion

We believe that Web3 and AI are culturally and technologically compatible. Unlike Web2, which tends to resist bots, Web3's permissionless programmability creates opportunities for AI to flourish.

From a broader perspective, if blockchain is viewed as a network, AI is expected to play a dominant role at the edge of that network. This applies to a wide range of consumer applications, from social media to gaming. So far, the edge of the Web3 network has consisted mainly of humans, who initiate and sign transactions or let bots act on their behalf using preset strategies.

Over time, we can foresee the emergence of more and more AI assistants at the network edge. These AI assistants will interact with humans and each other through smart contracts. We believe that these interactions will bring new consumer and user experiences, potentially triggering innovative application scenarios.

The permissionless nature of Web3 gives artificial intelligence greater freedom to integrate more closely with blockchain and distributed networks. This is expected to promote innovation, expand application areas, and create more personalized and intelligent experiences for users. At the same time, it is important to closely monitor privacy, security, and ethical issues to ensure that the development of artificial intelligence does not have negative impacts on users, but truly achieves a harmonious coexistence of technology and culture.
