AI has advanced across many fields faster than most people expected. Last week, Sequoia (Sequoia Capital US) argued that AI has entered its second chapter and presented a new AI map and LLM developer stack organized by application scenario.

However, judging by capital flows, AI still appears to be in a stage of intense competition, and the competitive landscape seems to have already taken shape. After OpenAI reached a valuation of nearly $29 billion with approximately $11 billion in investment from Microsoft and others, its competitor Anthropic announced a partnership with Amazon yesterday. Amazon will invest up to $4 billion, making Anthropic the second-largest AI startup after OpenAI in terms of financing.

After this financing, the AI industry, apart from Apple, has largely settled into the following competitive alignments:

- Microsoft + OpenAI
- Google + DeepMind
- Meta + MetaAI
- Amazon + Anthropic
- Tesla + xAI

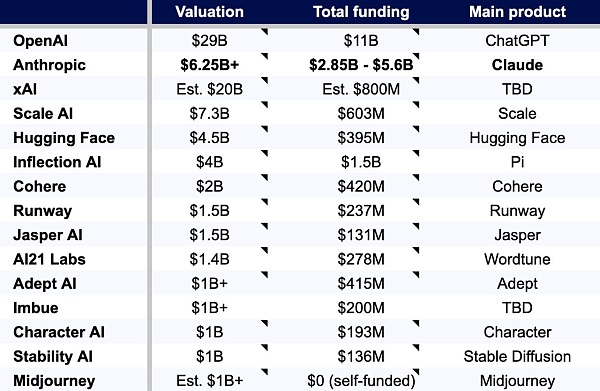

Of course, as the provider of the underlying infrastructure, Nvidia’s strategy is clearly to support all parties rather than align closely with any single company. Below is a rough overview of 15 AI unicorns. By valuation and financing, large language model (LLM) companies account for a large share, and 50% of the AI unicorns were founded after 2021:

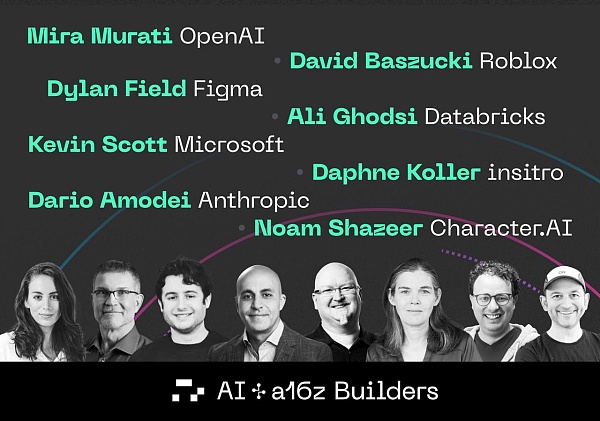

Today, a16z shared its conversations with the founders of several top AI companies. It argues that we are in the third era of computing and explores 16 interesting topics covering the present and future of AI, as well as openness. The participants in this conversation include:

- Martin Casado, Partner at a16z
- Mira Murati, CTO of OpenAI
- David Baszucki, Cofounder & CEO of Roblox
- Dylan Field, Cofounder & CEO of Figma
- Dario Amodei, Cofounder & CEO of Anthropic
- Kevin Scott, CTO & EVP of AI at Microsoft
- Daphne Koller, Founder & CEO of insitro
- Ali Ghodsi, Cofounder & CEO of Databricks
- Noam Shazeer, Cofounder & CEO of Character.AI

Since the original article is very long, about 10,000 words, I have compiled a short summary with the help of AI. Some terms may not be accurate. Interested readers can read the original English article:

1 We are at the beginning of the third era of computing

Martin Casado, a16z:

Noam Shazeer, Character AI:

Kevin Scott, Microsoft:

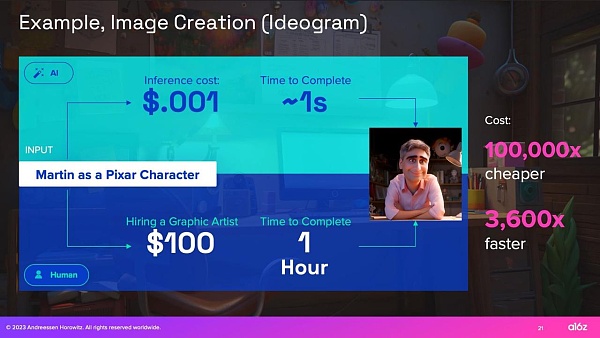

2 This wave of generative AI has economic principles that drive market transformation

For a technological innovation to trigger market transformation, the economic benefits must be compelling. Previous cycles of artificial intelligence brought many technological advances but lacked transformative economic benefits. In the current wave, we have already seen early signs of economic benefits improving by 10,000 times (or even more) in some applications, and as a result the adoption and development of artificial intelligence seems to be much faster than in any previous transformation.

Martin Casado, a16z:

3 For some early application scenarios: Creativity > Accuracy

Hallucination is a well-known issue with large language models (LLMs) today, but for certain application scenarios, the ability to make things up is a feature rather than a bug. Compared to earlier machine learning applications where a very high degree of accuracy is crucial (such as autonomous vehicles), many early use cases of LLMs (virtual friends and companions, brainstorming concepts, or building online games) share one characteristic: they focus on areas where creativity is more important than accuracy.

Noam Shazeer, Character.AI:

David Baszucki, Roblox:

Dylan Field, Figma:

4 For other scenarios, such as programming “co-pilots”, accuracy improves with human usage

Although artificial intelligence has the potential to enhance human work in many fields, programming “co-pilots” have become one of the first widely adopted AI assistants for several reasons:

First, developers are often early adopters of new technologies – an analysis of ChatGPT prompts from May/June 2023 found that 30% of ChatGPT prompts are related to programming. Second, the largest LLMs have been trained on datasets that include a substantial amount of code (e.g. the internet), making them particularly adept at responding to programming-related queries. Finally, humans are in the loop as users. So while accuracy is important, human developers with AI co-pilots can iterate to correctness faster than individual human developers.

Martin Casado, a16z:

When developers can query AI chatbots to help them write code and troubleshoot it, it changes development in two significant ways: 1) it makes collaboration in development easier for more people, as it is done through a natural language interface, and 2) human developers produce more and stay in flow longer.

Mira Murati, OpenAI:

Kevin Scott, Microsoft:

Dylan Field, Figma:

5 The combination of AI and biology can accelerate new approaches to treating diseases and have profound impacts on human health

Biology is extremely complex – perhaps beyond complete human comprehension. However, the intersection of artificial intelligence and biology can accelerate our understanding of biology and bring about some of the most exciting and transformative technological advancements of our time. AI-driven biological platforms have the potential to unlock previously unknown biological insights, leading to new medical breakthroughs, new diagnostic methods, and the ability to detect and treat diseases earlier, and even potentially prevent them before they occur.

Daphne Koller, insitro:

6 Putting the Model into the Hands of Users Will Help Us Discover New Use Cases

Previous iterations of AI models aimed to surpass humans at specific tasks, while Transformer-based LLMs excel at general reasoning. However, just because we have created a good general model does not mean we have worked out how to apply it to specific use cases. Just as keeping humans in the loop in the form of RLHF is crucial for improving the performance of today’s AI models, putting new technology into the hands of users and understanding how they use it will be key to determining what applications to build on top of these foundational models.

Kevin Scott, Microsoft:

Mira Murati, OpenAI:

7 Your AI Friend’s Memory Will Get Better

While data, computation, and model parameters power the general reasoning of LLMs, context windows power their short-term memory. Context windows are typically measured by the number of tokens they can handle. Today, most context windows are around 32K, but larger context windows are on the horizon, enabling LLMs to process larger documents with more context.
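As a rough illustration of why the context window behaves like short-term memory, the sketch below trims a chat history to a token budget. It is a simplification under stated assumptions: `count_tokens` is a hypothetical stand-in that counts whitespace-separated words, whereas real models use subword tokenizers, and `fit_to_context` is an illustrative helper rather than any vendor's API.

```python
def count_tokens(text: str) -> int:
    """Hypothetical tokenizer: approximates tokens by whitespace-separated words."""
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined token count fits the window."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are considered first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # Everything older than this point falls out of "memory".
        kept.append(message)
        used += cost
    # Restore chronological order before returning.
    return kept[::-1]


history = [
    "Hi, my name is Ada.",
    "Nice to meet you, Ada!",
    "What is my name?",
]

# With a small window, the message that introduced the name no longer fits.
print(fit_to_context(history, max_tokens=9))
# With a larger window, the whole conversation is visible to the model.
print(fit_to_context(history, max_tokens=32))
```

Under these assumptions, a larger budget simply lets more of the earlier conversation (or a longer document) remain visible to the model at once.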

Noam Shazeer, Character.AI:

Dario Amodei, Anthropic:

8 Voice chatbots, robots, and other ways of interacting with AI are an important area of research

Today, most people interact with AI in the form of chatbots, but that’s because chatbots are typically easy to build, not because they are the best interface for every use case.

Many builders are focused on developing new ways for users to interact with AI models through multimodal AI. Users will be able to interact with multimodal models in ways similar to how they interact with the world elsewhere: through images, text, speech, and other media. Taking it a step further, embodied AI focuses on AI that can interact with the physical world, such as autonomous vehicles.

Mira Murati, OpenAI:

Noam Shazeer, Character.AI:

Daphne Koller, insitro:

9 Will we have a few general models, a bunch of specialized models, or a mix of both?

Which use cases are best suited for larger “higher IQ” foundational models or smaller specialized models and datasets? Like the cloud vs. edge architecture debate from a decade ago, the answer depends on how much cost you’re willing to pay, the accuracy you need for output, and the level of latency you can tolerate. Over time, the answers to these questions may change as researchers develop more computationally efficient methods to fine-tune large foundational models for specific use cases.

In the long run, we may over-rotate on which models are used for which use cases, as we are still in the early stages of building the infrastructure and architecture to support the upcoming wave of AI applications.

Ali Ghodsi, Databricks:

Dario Amodei, Anthropic:

Mira Murati, OpenAI:

David Baszucki, Roblox:

10 When will artificial intelligence gain enough traction in enterprises, and what will happen to those datasets?

The impact of generative artificial intelligence on enterprises is still in its early stages, partly because enterprises tend to move slowly, and partly because they have realized the value of their proprietary datasets and may not want to hand that data to another company, no matter how powerful its models are. Most enterprise use cases require a high level of accuracy, and enterprises have three options for adopting an LLM: building their own, having an LLM service provider build one for them, or fine-tuning a base model. Building their own LLM is not easy.

Ali Ghodsi, Databricks:

11 Will the scaling law lead us to AGI?

LLMs currently follow a scaling law: as you add more data and computation, model performance improves, even if the architecture and algorithms remain unchanged. But how long can this law last? Will it continue indefinitely, or will it reach its natural limit before we develop AGI?
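One common way to write such a scaling law, given here as an illustrative assumption rather than a formula quoted from the conversation, is a power law relating test loss $L$ to training compute $C$, with fitted constants $C_0$ and $\alpha$:

$$
L(C) \approx \left(\frac{C_0}{C}\right)^{\alpha}, \qquad \alpha > 0
$$

Under this form, multiplying compute by a factor $k$ multiplies the loss by $k^{-\alpha}$:

$$
\frac{L(kC)}{L(C)} = \left(\frac{C_0}{kC}\right)^{\alpha} \left(\frac{C}{C_0}\right)^{\alpha} = k^{-\alpha}
$$

So each doubling of compute buys the same relative improvement, which is why the open question is whether the trend holds at ever larger scales.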

Mira Murati, OpenAI:

Dario Amodei, Anthropic:

Noam Shazeer, Character.AI:

12 What are emergent capabilities?

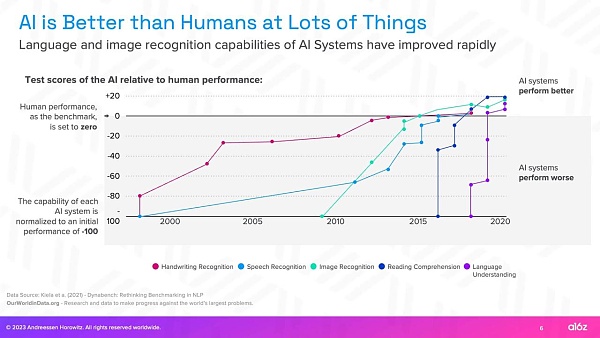

While some people were quick to dismiss the capabilities of generative AI, AI has already surpassed humans at certain tasks and will continue to improve. The best builders have been able to identify the most promising emergent capabilities of AI and build models and companies that turn these capabilities into reliable functions. They recognize that scale often increases the reliability of emergent capabilities.

Mira Murati, OpenAI:

Dario Amodei, Anthropic:

13 Will the cost of serving these models decrease?

The cost of computation is one of the main constraints on scaling these models, and the current chip shortage has pushed up costs by limiting supply. However, if Nvidia produces more H100s next year, this should alleviate the GPU shortage and may reduce the cost of computation.

Noam Shazeer, Character.AI:

Dario Amodei, Anthropic:

However, even if the cost of computation remains unchanged, improvements in model-level efficiency seem inevitable, especially with so much talent entering the field. AI itself may be the most powerful tool for improving the way AI works.

Dario Amodei, Anthropic:

One of the most promising research areas is fine-tuning large models for specific use cases without running the entire model.

Ali Ghodsi, Databricks:

14 How do we measure progress toward artificial general intelligence?

As we scale these models, how do we know when AI becomes artificial general intelligence (AGI)? Although we often hear the term AGI, it is difficult to define, partly because it is difficult to measure.

Quantitative benchmarks like GLUE and SuperGLUE have long been used as standardized metrics for measuring AI model performance. However, just like the standardized tests we give to humans, AI benchmarks raise a question: to what extent are you measuring an LLM’s reasoning ability, and to what extent are you measuring its ability to pass exams?

Ali Ghodsi, Databricks:

The original qualitative test for AGI was the Turing test, but convincing humans that an AI is human is not the hard problem. The challenge is getting AI to do what humans do in the real world. So, what tests can we use to understand the capabilities of these systems?

Dylan Field, Figma:

David Baszucki, Roblox:

15 Does it still require human involvement?

New technologies often replace some human jobs and tasks, but they also open up entirely new fields, increase productivity, and allow more people to engage in different types of work. While it’s easy to imagine AI automating existing jobs, imagining the next set of problems and possibilities that AI brings is much harder.

Martin Casado, a16z:

Kevin Scott, Microsoft:

Dylan Field, Figma:

16 Now is the most exciting time to start an AI startup (especially if you’re a physicist or mathematician)

It’s a unique and exciting time to build AI: foundational models are rapidly scaling, the economics are tilting in favor of startups, and there are many problems to solve. These problems require great patience and perseverance, and so far, physicists and mathematicians have proven particularly well-suited to address them. But as a rapidly evolving young field, AI is wide open, and now is the best time to build AI.

Dario Amodei, Anthropic:

Mira Murati, OpenAI:

Daphne Koller, insitro:

Kevin Scott, Microsoft:
